Yesterday we took delivery of a DataOn DNS-1640D JBOD tray with 8 * 600 GB 10K disks and 2 * 400 GB dual channel SSDs. This is going to be the heart of V2 of the lab at work, providing me with physical, scalable, and continuously available storage.

The JBOD

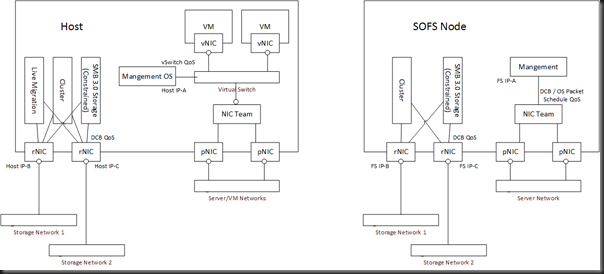

Below you can see the architecture of the setup. Let’s start with the DataOn JBOD. It has dual controllers and dual PSUs. Each controller has some management ports for factory use (not shown). In a simple non-stacked solution such as the one below, you’ll use SAS ports 1 and 2 to connect your servers. A SAS daisy-chaining port is included to allow you to expand this JBOD to multiple trays. Note that if scaling out the JBOD is on the cards, you should look at the much bigger models; this one takes 24 2.5” disks.

I don’t know why people still think that SOFS disks go into the servers – THEY GO INTO A SHARED JBOD!!! Storage inside a server cannot be HA; there is no replication or striping of internal disks between servers. In this case we have inserted 8 * 600 GB 10K HDDs (capacity at a budget) and 2 STEC 400 GB SSDs (speed). This will allow us to implement WS2012 R2 Storage Spaces tiered storage and write-back cache.
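To put numbers on that disk mix, here is a back-of-the-envelope sketch in Python. It assumes 2-way mirroring (the resiliency this setup ends up using) and deliberately ignores pool metadata, the write-back cache, and any spare capacity you hold back, so treat the results as upper bounds. The function name is hypothetical.

```python
def mirrored_tier_capacity_gb(disk_count: int, disk_size_gb: int, copies: int = 2) -> float:
    """Upper bound on a mirrored tier: raw capacity divided by data copies.

    Ignores pool metadata, write-back cache and reserve capacity, so the
    real usable figure will be somewhat lower.
    """
    if disk_count < copies:
        raise ValueError("a mirror needs at least as many disks as copies")
    return disk_count * disk_size_gb / copies


hdd_tier = mirrored_tier_capacity_gb(8, 600)  # 8 * 600 GB 10K HDDs
ssd_tier = mirrored_tier_capacity_gb(2, 400)  # 2 * 400 GB SSDs
print(f"HDD tier: {hdd_tier:.0f} GB, SSD tier: {ssd_tier:.0f} GB")
# prints: HDD tier: 2400 GB, SSD tier: 400 GB
```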

The Servers

I’m recycling the 2 servers that I’ve been using as Hyper-V hosts for the last year and a half. They’re HP DL360 servers. Sadly, HP ProLiants are stuck in the year 2009 and I can’t use them to demonstrate and teach new things like SR-IOV. We’re getting in 2 Dell rack servers to take over the role of Hyper-V hosts, and the HP servers will become our SOFS nodes.

Both servers had 2 * dual port 10 GbE cards, giving me 4 * 10 GbE ports. One card was full height and the other was modified to half height, occupying both PCIe slots in the servers. We got LSI SAS adapters to connect the 2 servers to the JBOD, so one 10 GbE card in each server had to make way for an LSI adapter. Each LSI adapter is full height and has 2 ports, so the 2 servers needed 4 SAS cables. SOFS Node 1 connects to port 1 on each controller on the back of the JBOD, and SOFS Node 2 connects to port 2 on each controller. The DataOn manual shows you how to attach further JBODs and cable the solution if you need more disk capacity in this SOFS module.
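The point of that cabling is that each node has a path through both JBOD controllers. A small Python model (hypothetical names, not a DataOn or Windows tool) can check the two properties that matter: every node keeps a path if either controller fails, and no JBOD port is used twice.

```python
# Hypothetical model of the SAS cabling: SOFS Node 1 uses port 1 and
# SOFS Node 2 uses port 2 on each of the JBOD's two controllers.
CABLES = {
    ("SOFS1", "ControllerA"): 1,
    ("SOFS1", "ControllerB"): 1,
    ("SOFS2", "ControllerA"): 2,
    ("SOFS2", "ControllerB"): 2,
}
CONTROLLERS = ("ControllerA", "ControllerB")


def paths_for(node, failed_controller=None):
    """Controllers through which a node can still reach the disks."""
    return [ctrl for (n, ctrl) in CABLES if n == node and ctrl != failed_controller]


def survives_controller_failure(nodes):
    """True if every node keeps at least one path whichever controller fails."""
    return all(paths_for(node, failed) for node in nodes for failed in CONTROLLERS)


def ports_unique():
    """True if no JBOD port (controller, port number) carries two cables."""
    used = [(ctrl, port) for (_, ctrl), port in CABLES.items()]
    return len(used) == len(set(used))


print(len(CABLES), survives_controller_failure(["SOFS1", "SOFS2"]), ports_unique())
# prints: 4 True True
```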

Note that I have added these features:

- Multipath I/O: This provides MPIO across the redundant SAS paths to the JBOD. There are rumblings of performance issues with it enabled.

- Windows Standards-Based Storage Management: This provides us with integration into the storage, e.g. SES (SCSI Enclosure Services).

The Cluster

The network design is what I’ve talked about before. The on-board 1 GbE NICs are teamed for management. The servers now have a single dual port 10 GbE card. These 10 GbE NICs ARE NOT TEAMED; I’ve put them on different subnets for SMB Multichannel (a cluster requirement). That means they are simple traditional NICs, each with a different IP address. I’ve used NetQosPolicy to apply QoS to those 2 networks on a per-protocol basis, so SMB 3.0, backup, and cluster communications can all share these two networks.
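Per-protocol QoS only matters when a NIC is saturated. As an illustration, the sketch below assumes weight-based minimum bandwidth with made-up weights (the post doesn't say which values were used) and shows how one 10 GbE link would be divided between the classes that are active at that moment.

```python
LINK_GBPS = 10.0  # each of the two 10 GbE NICs

# Hypothetical per-protocol weights for illustration only.
WEIGHTS = {"SMB": 50, "Backup": 30, "Cluster": 20}


def contended_share(active, link=LINK_GBPS, weights=WEIGHTS):
    """Split a saturated link between the traffic classes that are active.

    Weight-based minimums only bite under contention; an idle class
    reserves nothing, so its share is redistributed to the active classes
    in proportion to their weights.
    """
    total = sum(weights[c] for c in active)
    return {c: link * weights[c] / total for c in active}


print(contended_share(["SMB", "Backup", "Cluster"]))
# prints: {'SMB': 5.0, 'Backup': 3.0, 'Cluster': 2.0}
print(contended_share(["SMB", "Cluster"]))  # backup idle: SMB gets ~7.14 Gbps
```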

Hans Vredevoort (Hyper-V MVP colleague) went with a different approach: teaming the 10 GbE NICs and presenting team interfaces that are bound to different VLANs/subnets. In WS2012 R2, the Dynamic load-balancing mode uses flowlets to truly aggregate data and spread even a single data stream across the team members (physical interfaces).
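Flowlets are easiest to see in a toy model. The sketch below is not Microsoft's implementation; it assumes a fixed idle-gap threshold (the 500 µs value is hypothetical) and shows how a single stream can be split at its pauses and spread across two team members without reordering packets inside a burst.

```python
from dataclasses import dataclass


@dataclass
class Packet:
    time_us: float  # arrival time in microseconds
    size: int       # bytes


def assign_flowlets(packets, members, gap_us=500.0):
    """Spread one stream across team members at flowlet boundaries.

    A new flowlet starts when the idle gap since the previous packet exceeds
    gap_us (a hypothetical threshold). Each new flowlet is sent down the
    least-loaded member so far; packets inside a flowlet stay on one member,
    so they aren't reordered.
    """
    load = {m: 0 for m in members}
    placement = []
    current, last_time = None, None
    for pkt in packets:
        if last_time is None or pkt.time_us - last_time > gap_us:
            current = min(members, key=lambda m: load[m])
        load[current] += pkt.size
        placement.append(current)
        last_time = pkt.time_us
    return placement, load


# One stream with two pauses, i.e. three flowlets.
stream = [Packet(t, 1500) for t in (0, 10, 20, 1000, 1010, 3000)]
placement, load = assign_flowlets(stream, ["NIC1", "NIC2"])
print(placement)  # ['NIC1', 'NIC1', 'NIC1', 'NIC2', 'NIC2', 'NIC2']
print(load)       # {'NIC1': 4500, 'NIC2': 4500}
```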

Storage Spaces

The storage pool is created in Failover Cluster Manager. While TechEd demos focused on PowerShell, you can create a tiered pool and tiered virtual disks in the GUI. PowerShell is obviously the best approach for standardization and repetitive work (such as consulting). I’ve fired up a single virtual disk so far with a nice chunk of SSD tiering, and it’s performing pretty well.

First Impressions

I wanted to test quickly before the new Dell hosts come so Hyper-V is enabled on the SOFS cluster. This is a valid deployment scenario, especially for a small/medium enterprise (SME). What I have built is the equivalent (more actually) of a 2-node Hyper-V cluster with a SAS attached SAN … albeit with tiered storage … and that storage was less than half the cost of a SAN from Dell/HP. In fact, the retail price of the HDDs is around 1/3 the list price of the HP equivalent. There is no comparison.

I deployed a bunch of VMs with differencing disks last night. Nice and quick. Then I pinned the parent VHD to the SSD tier and created a boot storm. Once again, nice and quick. Nothing scientific has been done and I haven’t done comparison tests yet.

But it was all simple to set up and way cheaper than traditional SAN. You can’t beat that!

At last, a good, short article showing a simple SOFS cluster in a small real-life lab. So far, I’ve found many articles hard to follow when it comes to how it would work in real life. I would love to see a follow-up on the details of the SOFS configuration in Server Manager. For example, how does the tiering work, and how do you select which VMs go to the SSDs vs the 10Ks? And what about redundancy: are the SSDs redundant for each other? Also, are these JBOD trays what some vendors call a “SAS attached SAN”, or something else? Does HP, for example, have an equivalent?

LOL. HP and Dell want Storage Spaces to disappear. When you’ve invested billions in acquiring things like Compellent or 3PAR, having something that is designed for the cloud and costs a tiny percentage of the price … that ain’t good 🙂 JBODs are not SAS-attached SANs. They’re cheaper again.

Tiering is automated. By default, a scheduled task runs at 1am to promote or demote 1MB slices between the 2 tiers. Virtual disks are fault tolerant across the disks if you use mirrored or parity virtual disks, so what is stored on the SSDs is fault tolerant too.
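A simplified model of what that nightly pass decides: rank slices by how busy they were, put pinned content on SSD first, fill the rest of the SSD tier with the hottest slices, and leave the remainder on HDD. The heat metric and slice ids are hypothetical; Windows uses its own internal tracking, which this sketch does not reproduce.

```python
def plan_tiers(heat, ssd_slices, pinned=frozenset()):
    """Decide which 1 MB slices sit on the SSD tier after a tiering pass.

    heat maps slice id -> accesses since the last pass (hypothetical metric).
    Pinned slices (e.g. a parent VHD pinned to SSD) are placed first; the
    remaining SSD capacity is filled with the hottest unpinned slices and
    everything else is placed on the HDD tier.
    """
    ssd = sorted(pinned)[:ssd_slices]
    free = ssd_slices - len(ssd)
    unpinned = sorted((s for s in heat if s not in pinned),
                      key=lambda s: heat[s], reverse=True)
    ssd += unpinned[:free]
    hdd = [s for s in heat if s not in ssd]
    return set(ssd), set(hdd)


heat = {"a": 5, "b": 50, "c": 1, "d": 20}
print(plan_tiers(heat, ssd_slices=2))                # SSD: b, d
print(plan_tiers(heat, ssd_slices=2, pinned={"p"}))  # SSD: p, b
```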

Thanks for clearing that up! So Windows, not the hardware storage device, looks at which 1MB slices are “mostly used” and moves them up and down, correct? Then I can imagine they shake in their boots 🙂

Have you seen any dirt-cheap JBOD disk trays? I mean something with around 4-8 disks that you can use for a really small lab like mine?

Nothing on the HCL. You’ll have to take your chances with untested h/w.

Hello Aidan, and thanks for the work you put into your blog. I’m new to SOFS but trying to catch up. From what I understand, you first have your JBOD connected to your SOFS nodes (old HPs) using SAS. Then from the SOFS nodes (old HPs) you have 10Gbit network connections to your Hyper-V hosts (new Dells), am I correct? Also, what 10Gbit connections do you use: direct SFP+ Twinax cables, or do you go through a 10Gbit switch? Can you post a complete diagram of all of this?

Thanks again. Thumbs up!

In this lab, yes … but it only makes sense to have 2 layers (storage + hosts) if you need lots of Hyper-V hosts. The cluster that is attached to the storage can be the Hyper-V cluster … the JBOD is shared storage with CSVs. In our lab, we’re running 10 GbE SFP+. I’m putting in Chelsio iWARP cards to get SMB Direct. It could just as easily be 1 GbE, which will run at least as well as iSCSI, if not slightly better.

Great post, Aidan. We do a few P2000 SAS SANs for SMEs (2-3 hosts etc.) and I was thinking about this but wasn’t sure where to start.

I didn’t think running Hyper-V on the SOFS nodes themselves would be supported. And once you need to purchase another 2 hosts just for the SOFS role, the costs mount up (as does the complexity!).

Do you have a rough price for the JBOD chassis?

With disks and SAS adapters, it’s working out at around 40% of the cost of the equivalent SAS starter kit SAN. That improves as you scale because, comparing retail costs, the disks are down to 33% of the cost of the HP/Dell equivalent.

Great post!

So does that mean the JBOD/SOFS solution could be cheaper than a SAS SAN from HP (P2000) for a 2-3 node Hyper-V cluster for SMEs?

Where do you get the DataOn JBOD from? I can’t figure out from the DataOn website where we can buy their products…

This particular JBOD will work with 2 nodes – note there are 1 and 2 ports, but not 1, 2, and 3 ports. Most 3 node clusters I see in SMEs are hugely underutilized and could be 2 nodes instead. Using this solution would be much cheaper than a SAS SAN. Any customer that needs to scale out can:

a) Use another JBOD that accepts more connections OR

b) Use 2 file servers to share the JBOD as an SOFS (the file servers are basically the controllers of a “SAN”) and install dedicated hosts as normal.

Contact DataOn directly and they should be able to sort you out.

Hmm, sounds very interesting!

Thanks for your explanations.

According to the DataON tech I spoke with, the DNS-1640 does support 3 hosts. Although the 3rd port is labeled as an expansion port, it can be used for hosts as well. At least according to them. I haven’t tested it!

Great post Aidan – why can’t you work for a UK distie???

Is there a bit missing here? “Windows Standards-Based Storage Management: This provides us with w “

I might be showing my ignorance here, but would it be possible to implement and support ODX with a JBOD and SOFS with hardware like you’ve referenced?

Would that be entirely dependent on the JBOD controllers, or on the LSI SAS adapters within the SOFS nodes?

No.

What are your comments on storage redundancy? Let’s say you build an environment with the components you just specified above and you start to think about “storage redundancy”. With HP LeftHand you can add another LeftHand node and they replicate storage between them. But what about 2012 R2 Storage Spaces for storage redundancy? Would your recommendation be to simply set up another (separate) identical environment and make sure all servers are redundant for each other (two DCs replicating, two Exchange servers in a DAG, SQL AlwaysOn, etc.) and, for the few servers that are “single-server”, implement Hyper-V Replica? Or, if you have such redundancy requirements, should you rather go with HP LeftHand or similar?

Attach a minimum of 3 JBODs, and mirrored virtual disks will place their interleaves across the trays. Lose a tray … you keep the virtual disk alive.

Do the JBODs need to be directly connected to each other? Also, why do you need 3?

The JBODs are not connected together. Best practice: the SOFS nodes are directly connected to each JBOD and there is no daisy chaining. Buy the right JBODs and you can do this, even at great scale (over 1 PB). You don’t need 3. You can do it with 1 JBOD. But if you want to scale, you need more than one, obviously 🙂 If you want tray fault tolerance, then you need a minimum of 3 for 2-way mirroring and a minimum of 4 for 3-way mirroring.
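For tray fault tolerance, what matters is that the copies of each mirrored interleave never share a tray. The rotating placement below is a simplified sketch under that assumption (not the actual Storage Spaces allocator) with 3 hypothetical trays. It only models where data copies land, not whether the pool itself stays online.

```python
def place_interleaves(num_interleaves, trays, copies=2):
    """Place each interleave's mirror copies on different trays.

    The starting tray rotates per interleave so data spreads evenly.
    Needs at least as many trays as copies.
    """
    if copies > len(trays):
        raise ValueError("need at least as many trays as mirror copies")
    return [[trays[(i + k) % len(trays)] for k in range(copies)]
            for i in range(num_interleaves)]


def survives_tray_loss(layout, lost_tray):
    """True if every interleave still has a copy outside the lost tray."""
    return all(any(t != lost_tray for t in copies) for copies in layout)


trays = ["JBOD1", "JBOD2", "JBOD3"]
layout = place_interleaves(6, trays, copies=2)
print(layout[:3])  # [['JBOD1', 'JBOD2'], ['JBOD2', 'JBOD3'], ['JBOD3', 'JBOD1']]
print(all(survives_tray_loss(layout, t) for t in trays))  # True
```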

Aidan,

Great article, appreciate your time.

I note at the start of the article you mention dual port hard disks.

Is this for both the 600GB disks and the SSD disks?

Are dual port hard disks required as part of a Windows Server 2012 R2 File Cluster Storage Space? (I am guessing not because, as far as I understand, SATA disks are not dual port.)

Thank you again for your time.

Tom Ward

Dual channel SAS disks are required. LSI makes an “interposer” that you can place on a SATA disk but I hear that it’s not a great solution.

Aidan,

Thank you for coming back to me.

Tom Ward

I take it your plan is to utilize Storage Spaces with either parity or mirroring? Any reason you didn’t go with something like the LSI Syncro CS solution and use hardware RAID instead? I’m still quite skeptical of Storage Spaces performance, even with R2’s improvements allowing parity and write cache.

2-way mirror. Storage Spaces offers much more, like cheaper disks, tiered storage, Write-Back Cache, and more.

Aidan,

In your blog “Storage Spaces & Scale-Out File Server Are Two Different Things” you mentioned:

‘Note: In WS2012 you only get JBOD tray fault tolerance via 3 JBOD trays’

Is this still true with WS2012 R2, or does Microsoft now allow JBOD tray fault tolerance with 2 JBOD trays and 2-way mirroring, as one might expect?

Appreciate your blogs.

You still need 3 trays to form quorum.
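One way to see why 2 trays aren't enough: if the pool needs a strict majority to stay online, losing 1 of 2 identically populated trays leaves exactly half, which isn't a majority. The sketch assumes each tray counts as an equal share of the pool (true only when trays are laid out identically) and simplifies the real pool quorum rules.

```python
def pool_stays_online(trays_total, trays_lost):
    """Simplified majority rule: more than half the trays must survive."""
    online = trays_total - trays_lost
    return online * 2 > trays_total


for total in (2, 3, 4):
    print(total, "trays, lose 1:", pool_stays_online(total, 1))
# 2 trays, lose 1: False
# 3 trays, lose 1: True
# 4 trays, lose 1: True
```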

Hi 🙂 This is the most useful guide I have seen in 2 months of reading on MSN or TechNet.

The JBOD storage just uses dual-channel SAS disks and connects both servers to the same disks; that’s how the file cluster is formed.

So, if I take 2 servers instead of a JBOD enclosure, create VHDX files, use iSCSI to present them to the 2 nodes, and in the cluster create a mirrored drive from both targets, I think I will get a fault-tolerant (FT) storage solution, won’t I?

So, 2 servers for iSCSI targets, 2 servers for the SMB cluster, 2 servers for the Hyper-V cluster, and I’ve got an FT solution for my VMs?

thanks

No.

Can you start with a single tray and build up to quorum later?

Data will not be relocated, so no.

I was under the impression that when you added additional drives into the storage space it stripes the data across those new drives. Did I misunderstand?

You did misunderstand. Such a process slows down the storage. It doesn’t scale well.

Hi Aidan,

Just to clarify, when you talk about “tray fault-tolerance”, you’re not talking about drive fault tolerance, right? I.e., you can set up a cluster with mirrored quorum drives and CSV data drives all on the same tray, presumably with its redundant PSUs and controllers, using the dual port HDDs, right? So with tray fault-tolerance, you’re going beyond that to conditions that could take out the tray despite those redundancies?

I’m on a quest to build a 2-server fault-tolerant Hyper-V cluster. That means, so far as I can see, no SOFS, but for small sites I’d think that would be excessive anyway.

Looking at the various products, the vendor hand-waving and bafflegab make it really hard to tell what you’re getting, but if I read this correctly, this DataON unit eliminates the need for a SAS switch and includes a SAS expander… right?

— Ken

Tray fault tolerance is the ability to lose a complete tray and still have your virtual disks/data.

Hi Aidan,

Just a question, or a request if you like: could you maybe do a blog post on how to expand a currently running full setup with clustered Hyper-V hosts and SOFS? I’m wondering because I’ve been reading up on SOFS, thinking about deploying it in the coming new year, and I came across a guide on TechNet, “http://technet.microsoft.com/en-us/library/dn554251.aspx”, where they state:

“This solution requires a significant hardware investment to set up for testing purposes. You can work around this by starting with a smaller solution for testing. For example, you could use a file server cluster with two nodes and two JBODs, a simpler management cluster, and fewer compute nodes. When you’re comfortable with the solution in your lab, you can add nodes and JBODs to the file server cluster, though you’ll have to recreate the storage spaces to ensure that data is stored across all enclosures with enclosure awareness support.”

I’m particularly worried about this part:

“though you’ll have to recreate the storage spaces to ensure that data is stored across all enclosures with enclosure awareness support”

If I read that correctly, they are saying that I have to recreate the whole storage space to be able to use another (extra) JBOD enclosure that gets attached to the setup at a later point.

– Nicolaj

Correct. Storage Spaces does not move blocks around. If you intend to have 3-4 JBODs in your install, then buy them up front. Spread your initially required number of disks across the JBODs, ensuring that you have enough in each JBOD for each tier and minimum requirements for your tolerance type.

Hi Aidan,

I have read multiple articles trying to understand how failover resiliency works with Storage Spaces, but can’t seem to figure it out; maybe I am not understanding it right. We have purchased 10 SSDs, 24 900GB SAS HDDs, and another 6 70GB regular SAS drives that we would like to use as part of a RAID 10 across 3 JBODs. Currently the only config we have found to work is having 4 SSDs, 12 900GB SAS drives, and 2 70GB regular drives, identical on 2 JBODs, with the third holding the remaining 2 SSDs and 2 70GB drives. We did a basic file copy test on the storage space while shutting down an entire enclosure, and the volumes stayed up, no problem. But is there a better way to do the resiliency than this?

Each JBOD must be laid out identically.
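A quick way to check that rule, assuming “identically” means the same number of each disk type in every JBOD, is to compare per-tray counts. The sketch below also works out the largest identical split for the disks in the question above (the disk type labels are hypothetical).

```python
from collections import Counter


def identical_layout(jbods):
    """True if every JBOD holds the same count of each disk type."""
    counts = [Counter(disks) for disks in jbods.values()]
    return all(c == counts[0] for c in counts)


def even_split(totals, trays):
    """Largest identical per-JBOD layout, plus disks that don't fit it."""
    per_tray = {kind: n // trays for kind, n in totals.items()}
    leftover = {kind: n % trays for kind, n in totals.items() if n % trays}
    return per_tray, leftover


# The layout described in the question: two full JBODs and a partial third.
full = ["SSD"] * 4 + ["900GB HDD"] * 12 + ["70GB HDD"] * 2
current = {"JBOD1": full, "JBOD2": full, "JBOD3": ["SSD"] * 2 + ["70GB HDD"] * 2}
print(identical_layout(current))  # False

totals = {"SSD": 10, "900GB HDD": 24, "70GB HDD": 6}
per_tray, leftover = even_split(totals, 3)
print(per_tray)  # {'SSD': 3, '900GB HDD': 8, '70GB HDD': 2}
print(leftover)  # {'SSD': 1}
```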

Hey Aidan, we just set up a Windows 2012 R2 cluster using Storage Spaces with a DataOn DNS-1660. It’s fully populated with 60 6TB disks and working great. However, I’m curious whether you’ve found a good solution for monitoring all the JBOD hardware components for failures. This is an important piece that seems to be missing, and without it, our management is a little nervous about placing production data on this JBOD. I have found a PowerShell script on TechNet that provides a great report, but I’m hoping to find a way to just alert when needed. Any suggestions?

DataON have something coming soon. Microsoft has dropped the ball in this area.

In the case of using a SAS attached JBOD, does one need to go to the Administrative tool MPIO and check the box “Add support for SAS devices” ? Or is MPIO handled automatically?

Do this, yes.

Thanks Aidan. I also used some PowerShell that I found in some older posts of yours. Do you still recommend this load balance policy?

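```powershell
# Make Least Blocks (LB) the default MSDSM load-balance policy
Set-MSDSMGlobalDefaultLoadBalancePolicy -Policy LB
# Refresh the hardware claimed by MPIO
Update-MPIOClaimedHW
# Restart in 30 seconds so the changes take effect
shutdown /r /t 30
```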

LB, yes.
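LB is the MSDSM Least Blocks policy: each I/O goes down the path with the fewest blocks still outstanding. A toy Python model (hypothetical path names and I/O sizes, and no completions between requests) shows how it behaves.

```python
def pick_path_least_blocks(outstanding):
    """Least Blocks: choose the path with the fewest outstanding blocks."""
    return min(outstanding, key=outstanding.get)


def simulate(io_sizes_blocks, paths):
    """Send I/Os (sizes in blocks) with no completions in between."""
    outstanding = {p: 0 for p in paths}
    chosen = []
    for size in io_sizes_blocks:
        path = pick_path_least_blocks(outstanding)
        outstanding[path] += size
        chosen.append(path)
    return chosen, outstanding


chosen, outstanding = simulate([128, 8, 8, 64], ["Path1", "Path2"])
print(chosen)       # ['Path1', 'Path2', 'Path2', 'Path2']
print(outstanding)  # {'Path1': 128, 'Path2': 80}
```

Plain round robin would have alternated Path1/Path2 regardless of size, leaving the second 8-block I/O queued behind the 128-block one on Path1.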

Forgot to ask what the recommended file allocation unit size is when formatting a CSV volume for Hyper-V to use. I assume NTFS/64KB but want your opinion.

64 KB.

Aidan,

Your articles have been super helpful to me over the last couple of weeks. I do have a question which I cannot seem to find an answer to. For my SOFS, I have three file server nodes and three JBODs.

Do I need every FS node to have a direct SAS link from its HBAs to each JBOD? Or can each node link to two of them, with communication also happening over my 10Gb network?

Yes, each of your SOFS nodes should direct connect to each of the JBODs. Only lesser hardware does daisy chaining.

Actually, a better question to ask would be: is there a need for a third FS node? It seems that most JBODs (including the ones I have here) can only accept two server connections at a time.

Most do. However, at least with DataON, that’s not true. The old 6 Gbps 60-disk model had 4 interfaces per module. The new 12 Gbps 2U model has 6 interfaces per module. The best practice from MSFT these days appears to be 1 SOFS node per JBOD. See https://aidanfinn2.wpcomstaging.com/?p=18097.

Aidan,

Love your blog. I’ve been having serious trouble finding the answer to this. Most JBODs come with SAS IN and SAS OUT. I realize SAS IN is for connecting servers while SAS OUT is for connecting more JBODs (daisy chaining). However, when I plug a SAS cable from the SAS OUT port into the server, it appears to work just fine. Is there a hardware or throughput limitation to doing this? I ask because I’d like to add a third node to the scale-out file server. Thanks!

Probably not a problem. I was told that this could be done with the older 24 disk JBOD from DataON, but I never tried. And remember: daisy chaining is for chumps, like HP and Dell, that don’t design their JBODs correctly.