You’ll find much more detailed posts on creating a continuously available, scalable application file server cluster with transparent failover by Tamer Sherif Mahmoud and Jose Barreto, both of Microsoft. But I thought I’d do something rough to give you an overview of what’s going on.

Networking

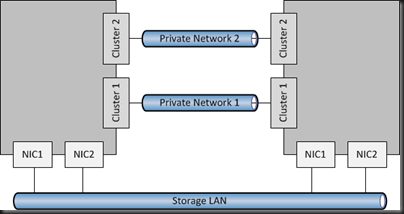

First, let’s deal with the host network configuration. The design shown here has 2 nodes in the SOFS cluster, and this could scale up to 8 nodes (think 8 SAN controllers!). There are 4 NICs:

- 2 for the LAN, to allow SMB 3.0 clients (Hyper-V or SQL Server) to access the SOFS shares. Having 2 NICs enables multichannel over both NICs. It is best that both NICs are teamed for quicker failover.

- 2 cluster heartbeat NICs. Having 2 gives fault tolerance, and also enables SMB Multichannel for CSV redirected I/O.

Storage

A WS2012 cluster supports the following storage:

- SAS

- iSCSI

- Fibre Channel

- JBOD with SAS Expander/PCI RAID

If you had SAS, iSCSI or Fibre Channel SANs then I’d ask why you’re bothering to create a SOFS for production; you’d only be adding another layer and more management. Just connect the Hyper-V hosts or SQL servers directly to the SAN using the appropriate HBAs.

However, you might be like me and want to learn this stuff or demo it, and all you have is iSCSI (either a software iSCSI target like the WS2012 iSCSI target or an HP VSA like mine at work). In that case, I have a pair of NICs in each of my file server cluster nodes, connected to the iSCSI network, and using MPIO.

If you do deploy SOFS in the future, I’m guessing (we don’t know yet because SOFS is so new) that you’ll most likely do it with a CiB (cluster in a box) solution with everything pre-hard-wired in a chassis, using (probably) a wizard to create mirrored storage spaces from the JBOD and configure the cluster/SOFS role/shares.

Note that in my 2 server example, I create three LUNs in the SAN and zone them for the 2 nodes in the SOFS cluster:

- Witness disk for quorum (512 MB)

- Disk for CSV1

- Disk for CSV2

Some have tried to be clever, creating lots of little LUNs on iSCSI to try to simulate JBOD and Storage Spaces. This is not supported.

Create The Cluster

Prereqs:

- Windows Server 2012 is installed on both nodes. Both machines named and joined to the AD domain.

- In Network Connections, rename the networks according to role (as in the diagrams). This makes things easier to track and troubleshoot.

- All IP addresses are assigned.

- NIC1 and NIC2 are top of the NIC binding order. Any iSCSI NICs are bottom of the binding order.

- Format the disks, ensuring that you label them correctly as CSV1, CSV2, and Witness (matching the labels in your SAN if you are using one).

Create the cluster:

- Enable Failover Clustering in Server Manager

- Also add the File Server role service in Server Manager (under File And Storage Services – File Services)

- Validate the configuration using the wizard. Repeat until you remove all issues that fail the test. Try to resolve any warnings.

- Create the cluster using the wizard – do not add the disks at this stage. Call the cluster something that refers to the cluster, not the SOFS. The cluster is not the SOFS; the cluster will host the SOFS role.

- Rename the cluster networks, using the NIC names (which should have already been renamed according to roles).

- Add the disk (in Storage in FCM) for the witness disk. Remember to edit the properties of the disk and rename it from the anonymous default name to Witness in FCM Storage.

- Reconfigure the cluster to use the Witness disk for quorum if you have an even number of nodes in the SOFS cluster.

- Add CSV1 to the cluster. In FCM Storage, convert it into a CSV and rename it to CSV1.

- Repeat the previous step for CSV2.
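The reason for the "even number of nodes" rule in the quorum step can be sketched with a bit of vote counting. This is a rough illustration, not how the cluster service is implemented: the function names are made up for this example, and it assumes one vote per node plus one vote for the witness disk, ignoring anything cleverer the cluster might do.

```python
def votes_needed(total_votes):
    """Smallest number of votes that forms a strict majority."""
    return total_votes // 2 + 1


def needs_witness(node_count):
    """An even node count can split into two equal halves, so a tie-breaker vote helps."""
    return node_count % 2 == 0


def quorum_summary(node_count):
    """Summarise the votes for a cluster of node_count nodes (hypothetical helper)."""
    witness = needs_witness(node_count)
    total = node_count + (1 if witness else 0)
    return {
        "nodes": node_count,
        "witness_disk": witness,
        "total_votes": total,
        "votes_for_quorum": votes_needed(total),
    }


if __name__ == "__main__":
    for n in (2, 3, 8):
        print(quorum_summary(n))
```

With 2 nodes and a witness there are 3 votes, so either node plus the witness disk can keep the cluster running if the other node goes away.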

Note: Hyper-V does not support SMB 3.0 loopback. In other words, the Hyper-V hosts cannot be a file server for their own VMs.

Create the SOFS

- In FCM, add a new clustered role. Choose File Server.

- Then choose File Server For Scale-Out Application Data; the other option is the traditional active/passive clustered file server.

- You will now create a Client Access Point or CAP. It requires only a name. This is the name of your “file server”. Note that the SOFS uses the IPs of the cluster nodes for SMB 3.0 traffic rather than CAP virtual IP addresses.

That’s it. You now have an SOFS. A clone of the SOFS is created across all of the nodes in the cluster, mastered by the owner of the SOFS role in the cluster. You just need some file shares to store VMs or SQL databases.

Create File Shares

Your file shares will be stored on CSVs, making them active/active across all nodes in the SOFS cluster. We don’t have best practices yet, but I’m leaning towards 1 share per CSV. But that might change if I have lots of clusters/servers storing VMs/databases on a single SOFS. Each share will need permissions appropriate for their clients (the servers storing/using data on the SOFS).

Note: place any Hyper-V hosts into security groups. For example, if I had a Hyper-V cluster storing VMs on the SOFS, I’d place all nodes in a single security group, e.g. HV-ClusterGroup1. That’ll make share/folder permissions stuff easier/quicker to manage.

- Right-click on the SOFS role and click Add Shared Folder

- Choose SMB Share – Server Applications as the share profile

- Place the first share on CSV1

- Name the first share as CSV1

- Permit the appropriate servers/administrators to have full control if this share will be used for Hyper-V. If you’re using it for storing SQL files, then give the SQL service account(s) full control.

- Complete the wizard, and repeat for CSV2.

You can view/manage the shares via Server Manager under File Server. If my SOFS CAP was called Demo-SOFS1 then I could browse to \\Demo-SOFS1\CSV1 and \\Demo-SOFS1\CSV2 in Windows Explorer. If my permissions are correct, then I can start storing VM files there instead of using a SAN, or I could store SQL database/log files there.
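The share paths are just the CAP name plus the share name in UNC form. Here is a minimal sketch of that; `unc_path` is a made-up helper for illustration, and the CAP and share names are the example ones from this post.

```python
def unc_path(cap_name, share_name):
    """Build a UNC path of the form \\\\<CAP name>\\<share name>."""
    # "\\\\" is two literal backslashes, "\\" is one.
    return "\\\\" + cap_name + "\\" + share_name


if __name__ == "__main__":
    cap = "Demo-SOFS1"          # the Client Access Point name, not a node name
    for share in ("CSV1", "CSV2"):
        print(unc_path(cap, share))
```

The point to take away is that clients address the SOFS by the CAP name, never by the name of an individual cluster node.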

As I said, it’s a rough guide, but it’s enough to give you an overview. Have a read of the above linked posts to see much more detail. Also check out my notes from the Continuously Available File Server – Under The Hood TechEd session to learn how a SOFS works.

Your single point of failure here remains your shared storage. Why not cluster 2 servers and mirror the storage in storage spaces? Would that be an option?

Only if a third party comes up with a solution, but this might affect your support from Microsoft.

There is no MS-supported solution for mirroring (or DFS-Replication) when using SOFS. But you can build a second Hyper-V Cluster with a second MS-CSV-SOFS and use Hyper-V Replication for such a disaster recovery solution.

And some thoughts from me:

SOFS could be for me – no complex SAN, just shared SAS JBOD. Just imagine – HP c7000, up to 16 blades – and you could insert 2x 6120XG switches to connect all blades to your network and 2x 6 Gbps SAS switches to connect to one or more storage enclosures. You could be using one enclosure for SSD, one for 15k SAS, one for 7.2k SATA – I can connect the tape library, too. So now it is up to you: 1x DPM host, 2x SOFS cluster hosts, 13x Hyper-V hosts… or for more IO just reduce the number of Hyper-V hosts and increase the SOFS hosts. And if you need a second c7000 enclosure – some of HP’s shared SAS solutions can be connected to two enclosures.

Hi Aidan,

I read your article, but I have a requirement. Can you please help me out?

Our requirement is that we want to deploy Hyper-V on two servers, and these servers should not use shared storage like a SAN or similar. Each node should have its own local storage, and the nodes would replicate the Hyper-V VMs to each other. Is that possible? If so, let me know how I can do this.

Many thanks

Zaheer

Hyper-V Replica

Hello Aidan,

I have been reading your articles on Hyper-V and Storage Spaces. SMB and SOFS etc. I love the idea of building an affordable SAN as I do work for the Small to Medium business sector and as Server 2003 comes to end of life we are pushing on moving our customers to 2012 but also providing better failover and redundancy.

I am after a base scenario of what sort of kit I would need for a 2 or 3 node cluster, all running Server 2012, with an additional 1 or 2 file servers as the SOFS to hold the Hyper-V machines or SQL databases. Is it also possible to use these file servers for other file shares such as user folders and other data?

Cost is a huge factor for small business and I would be keen to know your thoughts on a typical budget to build this sort of solution based on having a selection of JBODs, all configured for failover and clustering.

Many thanks

For just 2 nodes, keep it simple: JBOD + disks + SAS cables + 2 controllers … and the 2 nodes. Attach the JBOD to the Hyper-V nodes. If a small company only needs 3 nodes, then see if the JBOD can support 3 connecting servers.

For the JBOD: check the HCL. This is the 2012 HCL.

DO NOT PUT USER SHARES ON A SOFS. Put virtual file servers on the SOFS and put user shares in the virtual file server.

My lab storage solution will come in around 1/3 the price of the equivalent Dell starter kit SAN, but with twice the storage capacity.

Thanks for the quick response Aidan. That gives me a good idea of where to start and an idea of cost.

One other query: if the SOFS wasn’t an option, can the 2 nodes be set up without a SAN, with an iSCSI target created on each to use for CSV?

I understand this is not a good scenario, but for the smaller business can it be set up that way?

Thanks again

No. The iSCSI target would be on another server. But at that point, you might as well use SMB 3.0 file shares on that other server. And that brings you to a single point of failure without clustering that storage (iSCSI target does not have transparent failover).

I feel like I’m resurrecting an old thread here, but here goes!

I (like Gareth) am working with a limited budget in an SMB environment. We’re contemplating migrating away from VMware and using Hyper-V because the licensing costs are so much lower.

Here’s my question. Is it possible to use your proposal (2 servers as Hyper-V nodes + SAS cables + 2 JBODs) and then host 2 VMs on that cluster that would be the SOFS cluster? I’m looking to implement this as a testbed for our company with some low-priority file servers, and then migrating our VMs over to this Hyper-V cluster once proof-of-concept has been achieved.

Thanks for your help!

Yes. Storage Spaces is supported cluster storage. So you don’t build a SOFS cluster; instead you build a Hyper-V cluster and simply store the VMs on the CSVs – no need for SMB 3.0. Also see Cluster-in-a-Box (CiB).

I created a two node CSV SOFS with a single JBOD 6 disk cabinet (4 RSS NICs). It is used by my 4 node Hyper-V cluster via an SMB share managed by VMM. It seems to work as expected, but writes are maybe a little slow. Q1: I was wondering if I should create the share with VDI deduplication or if that would interfere with block caching. Q2: Should I use Mirrored or Parity for the Storage Pool?

– VDI deduplication is only supported for …. drum roll please … non-pooled VDI virtual machines.

– Using VDI deduplication disables CSV Cache for an enabled volume. That’s not an issue because it improves performance over the long term, e.g. in a boot storm. It’s complicated to explain.

Hi,

I wanted to know if it is possible to do this:

I want to build a 2 node cluster, but without a third server as the file server.

I want the cluster itself to be the file server.

Thanks for the help.

No. It’s not possible and it would overcomplicate things. Instead, you share a JBOD between the two cluster nodes (each server has 2 SAS connections to the JBOD), implement Storage Spaces, and use the CSV to store your VMs. Also look at CiB (cluster in a box). No SMB 3.0 required.

Hi,

I have to design a large solution, and I currently don’t have sufficient equipment to test it first. Depending on your kind comment, we might go on to set up a test lab. The requirement is not a large number of IOPS, but one single share that is as big as possible:

A 2 node Storage Spaces cluster with 4 JBODs, each with 80 x 6 TB disks (mirror spaces), compliant with:

•Up to 80 physical disks in a clustered storage pool (to allow time for the pool to fail over to other nodes)

•Up to four storage pools per cluster

•Up to 480 TB of capacity in a single storage pool

That gives us roughly 960TB formatted space in one CSV?
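The arithmetic behind that figure can be checked with a quick sketch. It takes the limits quoted in the comment as given (they are not verified here), assumes 2-way mirroring, and ignores formatting overhead and any reserve capacity; the function names are invented for this example.

```python
# Limits as quoted in the comment above (treated as given, not verified).
MAX_DISKS_PER_POOL = 80
MAX_POOLS_PER_CLUSTER = 4
MAX_POOL_TB = 480


def pool_raw_tb(disks, disk_tb):
    """Raw capacity of one pool in TB."""
    return disks * disk_tb


def within_quoted_limits(pools, disks_per_pool, disk_tb):
    """Check a design against the three quoted limits."""
    return (
        pools <= MAX_POOLS_PER_CLUSTER
        and disks_per_pool <= MAX_DISKS_PER_POOL
        and pool_raw_tb(disks_per_pool, disk_tb) <= MAX_POOL_TB
    )


def mirrored_usable_tb(raw_tb, copies=2):
    """Usable capacity when every block is stored 'copies' times (assumed 2-way mirror)."""
    return raw_tb / copies


if __name__ == "__main__":
    pools, disks, disk_tb = 4, 80, 6
    raw_total = pools * pool_raw_tb(disks, disk_tb)
    print("within limits:", within_quoted_limits(pools, disks, disk_tb))
    print("raw TB:", raw_total)
    print("mirrored TB per pool:", mirrored_usable_tb(pool_raw_tb(disks, disk_tb)))
    print("mirrored TB in total:", mirrored_usable_tb(raw_total))
```

This only checks the sums. It says nothing about how that space can be carved into virtual disks or CSVs.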

Next would be to set up a 2 or 4 node SOFS. And here follows the most crucial question:

Is it possible to extend one single CSV over two Storage Spaces clusters? By that we would get a roughly 1.92 PB volume? Can we add even more Storage Spaces clusters? If yes, what would be the limit?

Thank you very much for your help.

You cannot span CSVs beyond a volume. You’re going to have 1 CSV per virtual disk. You cannot span CSVs across SOFS clusters.

You can have 8 nodes in a SOFS cluster. You could have 4 “bricks”, with each brick having 2 nodes and a set of JBODs shared just between those 2 nodes. In theory, it can be done, but not likely. People are more likely to go with the stamp approach, as seen in MSFT’s CPS – one SOFS cluster and the SMB clients (Hyper-V hosts) in a rack. Need more capacity? Start over with a new rack. That constrains failure to within the rack.

Dear Aidan, thank you for your answer – I really do appreciate that very much.

I have a question again – hopefully the last one to finish my design 🙂

Limitation of clustered storage pools:

4 storage pools per cluster * 80 disks * 6TB per disk (this seems to be a limitation)

Is that a real HW or SW limitation – or just a kind of recommendation?

When I am calculating our needs, I can easily attach 8 or even 12 or even 28 storage pools each 80 disks and would not hit NIC or HBA or PCIe bandwidth/transfer capacities per host node.

For example (I am not talking about backups) – imagine that I need a daily copy of something and I can store that exactly on 4 storage pools. But I need to keep those copies for 7 days, which means I would need 28 storage pools. Since storage pools are idle most of the time, I don’t understand why I can’t attach so many storage pools. Buying host nodes and networking while not really utilizing them is hard to justify.

Thank you very much for your help.

See here: http://social.technet.microsoft.com/wiki/contents/articles/11382.storage-spaces-frequently-asked-questions-faq.aspx#What_are_the_recommended_configuration_limits

I still find Storage Spaces very confusing.

Is it really necessary to have SSD storage? In theory, for a 12 bay enclosure made up of 10 x 4 TB, you need approximately 10 percent SSD storage by best practice, so that is 2 × 1.6 TB solid state drives. That becomes very expensive, at $5000 each, because all drives need to be SAS.

Is SSD storage really make or break in Storage Spaces?

I intend to run it for Hyper-V.

When you create a mirrored space, can you work your space out as the number of disks divided by 2, like RAID 10, or is it dependent on the number of columns?

No, you don’t need SSD storage. But if you’re going to run 7200 RPM drives in a 12 bay unit, I think you’d be mad not to put 4 x 400 GB SSDs in there. We (www.mwh.ie) sell 12 bay CiBs with 4 x 400 GB SSDs with 8 x 4TB or 6TB drives. Customers get the best of speed and economical capacity.
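To put rough numbers on the configurations mentioned above, here is a small sketch that works out raw SSD capacity as a share of raw HDD capacity. It is plain arithmetic on the drive counts and sizes quoted in this thread, with 1 TB treated as 1000 GB; `ssd_share` is a hypothetical helper, and the result is not a sizing recommendation.

```python
def ssd_share(ssd_count, ssd_gb, hdd_count, hdd_gb):
    """Raw SSD capacity as a fraction of raw HDD capacity."""
    return (ssd_count * ssd_gb) / (hdd_count * hdd_gb)


if __name__ == "__main__":
    # 4 x 400 GB SSDs alongside 8 x 4 TB or 8 x 6 TB HDDs.
    for hdd_tb in (4, 6):
        share = ssd_share(4, 400, 8, hdd_tb * 1000)
        print(f"8 x {hdd_tb} TB HDD: SSD is {share:.1%} of HDD capacity")
```

How much of that SSD capacity is actually useful depends on the workload, which is why this only reports the ratio.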

Hi Aidan,

I can’t seem to find any recommended hardware specs for a SOFS?

As a test we have 5 x VMs with storage on a JBOD through a single SOFS. Can you please recommend whether it is CPU, RAM, network cards, etc. that need to be a strength? Our test setup with 5 x VMs running is using 5-10% CPU and 3 of 16 GB RAM. The network is 1 Gb and is about 20% used. This is on an old Dell 1950. Do we need to spend additional money on new hardware for our 2 SOFS nodes, or can we use older hardware and spend this money on our hosts?

Thanks

Tim

If you have RDMA then the CPU is lightly loaded on SOFS nodes. It normally only gets loaded during a disk repair. If you don’t have RDMA then you’ll need more CPU. A pair of E5s will do the trick. Probably even just 1. On RAM, Windows Server will use what you put in for caching, but even a standard machine will do the trick. I would typically have 2-4 SOFS nodes, 4 for the big 4 x 60 disk footprints, with at least 1 CSV per node.

Hi Aidan.

I have 1 JBOD with 4 SSD’s and 20 HDD’s and two nodes for SOFS.

I read that it’s best practice to have one CSV per host.

A simple question. Is it best practice to have 1 virtual disk with 2 volumes (CSV’s) or have 2 virtual disks with 1 volume (CSV) on each one?

Thanks

Thomas

1 virtual disk = 1 CSV.
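Putting that together with the "at least 1 CSV per node" guideline, the layout can be sketched as below. The `plan_csvs` helper and the VDisk/CSV names are made up for this illustration; the only rules it encodes are 1 virtual disk per CSV and a chosen number of CSVs per node.

```python
def plan_csvs(node_count, csvs_per_node=1):
    """Return one virtual disk per CSV, with csvs_per_node CSVs for each SOFS node."""
    plan = []
    for i in range(1, node_count * csvs_per_node + 1):
        # 1 virtual disk = 1 CSV, so the two are always paired one-to-one.
        plan.append({"virtual_disk": f"VDisk{i}", "csv": f"CSV{i}"})
    return plan


if __name__ == "__main__":
    for entry in plan_csvs(node_count=2):
        print(entry)
```

For the 2 node SOFS in the question that means 2 virtual disks, each with a single volume that becomes a CSV.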

Hi Aidan,

On which network should you set up the cluster IP address that the SOFS lives on?

I have an Internal network (for domain communication)

a storage network (with 2 NICs and 2 subnets for MPIO)

a dedicated cluster network (just for cluster communication)

an out of band management network (just for RDPing into the servers and making changes).

I would assume it would have to be the domain network because DNS etc is all hosted on there? But the high speed storage networks is ultimately what I want my vhosts to communicate to the SOFS over?

See https://aidanfinn2.wpcomstaging.com/?p=14879

Set SMB Multichannel constraints on the hosts/SOFS nodes for the cluster networks. If you do the clustering right on the SOFS nodes (enable client comms on the cluster networks – ignore the warning about IPs, etc.) then the SOFS nodes will register DNS records for the cluster networks, enabling the hosts to connect to the SOFS on the cluster networks. This design converges all the SMB 3.x goodness to those 2 NICs (not teamed) so we can take advantage of investment in 10GbE or faster and any RDMA we might have:

– Redirected IO

– SOFS traffic

– Live Migration

Is there any supported way to use locally attached storage on the hosts/nodes to have any type of redundancy or HA for where the VHDX files are stored? All of the examples are for JBOD enclosures, etc. I just want to use my old trusty hardware RAID controller.

Buy DoubleTake, etc. Or wait for WS2016.

Aidan,

When deploying Remote Desktop Services (pooled collection) on a Hyper-V host that stores the virtual machines on a SOFS, should User Profile Disks be stored directly on the SOFS share or on a standard file server running in a VM?

Thanks and kind regards,

Bob

Good question – one I’ve never been asked. I’ll have to ask about that one.

OK, the consensus appears to be to use a CA share on the SOFS.

Thank you, much appreciated.

Aidan,

Unfortunately you filled the room at Ignite a little earlier than expected this year… But no worries, I caught it online. Great session, and glad to see you posting again. Anyway, I have a scenario similar to the one you describe here, except we are looking to utilize 2012 R2.

We have a client with the following:

2x HP P2000 iSCSI SANs, each with redundant controllers

2x file servers cross-connected to each SAN through iSCSI, running DataCore and using DataCore’s MPIO software (all DataCore is doing is mirroring the vDisk from one SAN to the other and providing transparent failover should an entire enclosure fail, and using 20 GB of RAM for caching)

2x Hyper-V servers in a cluster utilizing the DataCore storage, with an additional standalone Hyper-V server just hosting a couple of VMs on local storage.

We are looking to move them to the native Windows stack and remove DataCore from the equation while providing a similar level of redundancy. Windows Server doesn’t support mirroring one CSV to another (I know 2016 may help in this area), and I can’t use SAS JBODs; even if I did, I wouldn’t have 3 enclosures to create an enclosure-aware vDisk in Storage Spaces. So my plan was to:

Rebuild the DataCore boxes with 2012 R2, create a file server cluster, and install the SOFS role. I would use 1x P2000 SAN, serving it to the file server cluster through multiple iSCSI connections with native MPIO, then create a CSV with a continuously available SMB 3.0 share on top and serve it to the Hyper-V cluster (I would also use deduplication). This would host all the production VMs (this would be the fast storage SAN).

I would then take the other SAN (with the slower storage), serve it directly to the standalone Hyper-V server (again, multiple iSCSI connections with MPIO), and turn on Hyper-V Replica from the cluster to the standalone server. This server would be used for DR, hopefully easing their concerns about not having SAN-level redundancy with transparent failover in the event the production SAN dies (though that is very unlikely: it is plugged into two separate UPSs, on generators, utilizing redundant switches, essentially the works).

My questions are: (A) do you see any issues with this configuration, and (B) should I just be connecting my fast SAN directly to my Hyper-V cluster? Are there any drawbacks to not having that storage cluster in the middle? You mentioned CSV cache. I think they would feel best about utilizing all their current hardware, even if the advantage of having that storage layer was only very small, as they have the hardware and would like to use it. Thanks for your help in advance. I know it’s somewhat of a long post!

Any chance you can diagram this? I’m getting lost in the text.