This session, presented by Claus Joergensen, Michael Gray, and Hector Linares can be found on Channel 9:

Current WS2012 R2 Scale-Out File Server

This design is known as converged (not hyper-converged). There are two tiers:

- Compute tier: Hyper-V hosts that are connected to storage by SMB 3.0 networking. Virtual machine files are stored on the SOFS (storage tier) via file shares.

- Storage tier: A transparent failover cluster that is SAS-attached to shared JBODs. The JBODs are configured with Storage Spaces. The Storage Spaces virtual disks are configured as CSVs, and the file shares that are used by the compute tier are kept on these CSVs.

The storage tier or SOFS has two layers:

- The transparent failover cluster nodes

- The SAS-attached shared JBODs that each SOFS node is (preferably) direct-connected to

System Center is an optional management layer.

Introducing Storage Spaces Direct (S2D)

Note: you might hear/see/read the term SSDi (there’s an example in one of the demos in the video). This was an old abbreviation. The correct abbreviation for Storage Spaces Direct is S2D.

The focus of this talk is the storage tier. S2D collapses this tier so that there is no need for a SAS layer. Note, though, that the old SOFS design continues and has scenarios where it is best. S2D is not a replacement – it is another design option.

S2D can be used to store VM files on it. It is made of servers (4 or more) that have internal or DAS disks. There are no shared JBODs. Data is mirrored across each node in the S2D cluster, therefore the virtual disks/CSVs are mirrored across each node in the S2D cluster.

S2D introduces support for new disks (with SAS disks still being supported):

- Low cost flash with SATA SSDs

- Better flash performance with NVMe SSDs

Other features:

- Simple deployment – no eternal enclosures or SAS

- Simpler hardware requirements – servers + network, and no SAS/MPIO, and no persistent reservations and all that mess

- Easy to expand – just add more nodes, and get storage rebalancing

- More scalability – at the cost of more CPUs and Windows licensing

S2D Deployment Choice

You have two options for deploying S2D. Windows Server 2016 will introduce a hyper-converged design – yes I know; Microsoft talked down hyper-convergence in the past. Say bye-bye to Nutanix. You can have:

- Hyper-converged: Where there are 4+ nodes with DAS disks, and this is both the compute and storage tier. There is no other tier, no SAS, nothing, just these 4+ servers in one cluster, each sharing the storage and compute functions with data mirrored across each node. Simple to deploy and MSFT thinks this is a sweet spot for SME deployments.

- Converged (aka Private Cloud Storage): The S2D SOFS is a separate tier to the compute tier. There are a set of Hyper-V hosts that connect to the S2D SOFS via SMB 3.0. There is separate scaling between the compute and storage tiers, making it more suitable for larger deployments.

Hyper-convergence is being tested now and will be offered in a future release of WS2016.

Choosing Between Shared JBODs and DAS

As I said, shared JBOD SOFS continues as a deployment option. In other words, an investment in WS2012 R2 SOFS is still good and support is continued. Node that shared JBODs offer support for dual parity virtual disks (for archive data only – never virtual machines).

S2D adds support for the cheapest of disks and the fastest of disks.

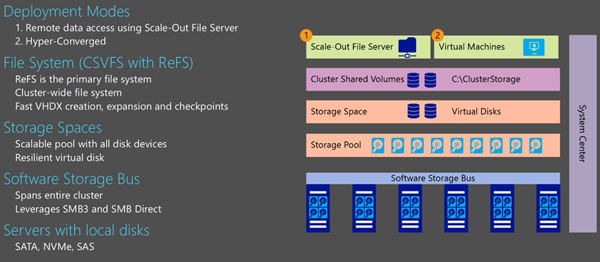

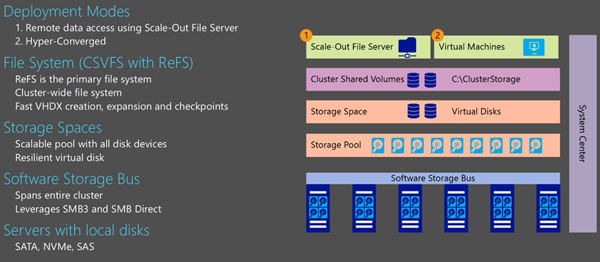

Under The Covers

This is a conceptual, not an architectural, diagram.

A software storage bus replaces the SAS shared infrastructure using software over an Ethernet channel. This channel spans the entire S2D cluster using SMB 3.0 and SMB Direct – RDMA offers low latency and low CPU impact.

On top of this bus that spans the cluster, you can create a Storage Spaces pool, from which you create resilient virtual disks. The virtual disk doesn’t know that it’s running on DAS instead of shared SAS JBOD thanks to the abstraction of the bus.

File systems are put on top of the virtual disk, and this is where we get the active/active CSVs. The file system of choice for S2D is ReFS. This is the first time that ReFS is the primary file system choice.

Depending on your design, you either run the SOFS role on the S2D cluster (converged) or you run Hyper-V virtual machines on the S2D cluster (hyper-converged).

System Center is an optional management layer.

Data Placement

Data is stored in the form of extents. Each extent is 1 GB in size so a 100 GB virtual disk is made up of 100 extents. Below is an S2D cluster of 5 nodes. Note that extents are stored evenly across the S2D cluster. We get resiliency by spreading data across each node’s DAS disks. With 3-way mirroring, each extent is stored on 3 nodes. If one node goes down, we still have 2 copies, from which data can be restored onto a different 3rd node.

Note: 2-way mirroring would keep extents on 2 nodes instead of 3.

Extent placement is rebalanced automatically:

- When a node fails

- The S2D cluster is expanded

How we get scale-out and resiliency:

- Scale-Out: Spreading extents across nodes for increased capacity.

- Resiliency: Storing duplicate extents across different nodes for fault tolerance.

This is why we need good networking for S2D: RDMA. Forget your 1 Gbps networks for S2D.

Scalability

- Scaling to large pools: Currently we can have 80 disks in a pool. in TPv2 we can go to 240 disks but this could be much higher.

- The interconnect is SMB 3.0 over RDMA networking for low latency and CPU utilization

- Simple expansion: you just add a node, expand the pool, and the extents are rebalanced for capacity … extents move from the most filled nodes to the most available nodes. This is a transparent background task that is lower priority than normal IOs.

You can also remove a system: rebalance it, and shrink extents down to fewer nodes.

Scale for TPv2:

- Minimum of 4 servers

- Maximum of 12 servers

- Maximum of 240 disks in a single pool

Availability

S2D is fault tolerance to disk enclosure failure and server failure. It is resilient to 2 servers failing and cluster partitioning. The result should be uninterrupted data access.

Each S2D server is treated as the fault domain by default. There is fault domain data placement, repair and rebalancing – means that there is no data loss by losing a server. Data is always placed and rebalanced to recognize the fault domains, i.e. extents are never stored in just a single fault domain.

If there is a disk failure, there is automatic repair to the remaining disks. The data is automatically rebalanced when the disk is replaced – not a feature of shared JBOD SOFS.

If there is a temporary server outage then there is a less disruptive automatic data resync when it comes back online in the S2D cluster.

When there is a permanent server failure, the repair is controlled by the admin – the less disruptive temporary outage is more likely so you don’t want rebalancing happening then. In the event of a real permanent server loss, you can perform a repair manually. Ideally though, the original machine will come back online after a h/w or s/w repair and it can be resynced automatically.

ReFS – Data Integrity

Note that S2D uses ReFS (pronounced as Ree-F-S) as the file system of choice because of scale, integrity and resiliency:

- Metadata checksums protect all file system metadata

- User data checksums protect file data

- Checksum verification occurs on every read of checksum-protected data and during periodic background scrubbing

- Healing of detected corruption occurs as soon as it is detected. Healthy version is retrieved from a duplicate extent in Storage Spaces, if available; ReFS uses the healthy version to get Storage Spaces to repair the corruption.

No need for chkdsk. There is no disruptive offline scanning in ReFS:

- The above “repair on failed checksum during read” process.

- Online repair: kind of like CHKDSK but online

- Backups of critical metadata are kept automatically on the same volume. If the above repair process fails then these backups are used. So you get the protection of extent duplication or parity from Storage Spaces and you get critical metadata backups on the volume.

ReFS – Speed and Efficiency

Efficient VM checkpoints and backup:

- VHD/X checkpoints (used in file-based backup) are cleaned up without physical data copies. The merge is a metadata operation. This reduces disk IO and increases speed. (this is clever stuff that should vastly improve the disk performance of backups).

- Reduces the impact of checkpoint-cleanup on foreground workloads. Note that this will have a positive impact on other things too, such as Hyper-V Replica.

Accelerated Fixed VHD/X Creation:

- Fixed files zero out with just a metadata operation. This is similar to how ODX works on some SANs

- Much faster fixed file creation

- Quicker deployment of new VMs/disks

Yay! I wonder how many hours of my life I could take back with this feature?

Dynamic VHDX Expansion

- The impact of the incremental extension/zeroing out of dynamic VHD/X expansion is eliminated too with a similar metadata operation.

- Reduces the impact too on foreground workloads

Demo 1:

2 identical VMs, one on NTFS and one on ReFS. Both have 8 GB checkpoints. Deletes the checkpoint from the ReFS VM – The merge takes about 1-2 seconds with barely any metrics increase in PerfMon (incredible improvement). Does the same on the NTFS VM and … PerfMon shows way more activity on the disk and the process will take about 3 minutes.

Demo 2:

Next he creates 15 GB fixed VHDX files on two shares: one on NTFS and one on ReFS. ReFS file is created in less than a second while the previous NTFS merge demo is still going on. The NTFS file will take …. quite a while.

Demo 3:

Disk Manager open on one S2D node: 20 SATA disks + 4 Samsung NVMe disks. The S2D cluster has 5 nodes. There is a total of 20 NVMe devices in the single pool – a nice tidy aggregation of PCIe capacity. The 5 node is new so no rebalancing has been done.

Lots of VMs running from a different tier of compute. Each VM is running DiskSpd to stress the storage. But distributed Storage QoS is limiting the VMs to 100 IOPs each.

Optimize-StoragePool –FriendlyName SSDi is run to bring the 5th node into the cluster (called SSDi) online by rebalancing. Extents are remapped to the 5th node. The system goes full bore to maximize IOPS – but note that “user” operations take precedence and the rebalancing IOPS are lower priority.

Storage Management in the Private Cloud

Management is provided by SCOM and SCVMM. This content is focused on S2D, but the management tools also work with other storage options:

- SOFS with shared JBOD

- SOFS with SAN

- SAN

Roles:

- VMM: bare-metal provisioning, configuration, and LUN/share provisioning

- SCOM: Monitoring and alerting

- Azure Site Recovery (ASR) and Storage Replica: Workload failover

Note: You can also use Hyper- Replica with/without ASR.

Demo:

He starts the process of bare-metal provisioning a SOFS cluster from VMM – consistent with the Hyper-V host deployment process. This wizard offers support for DAS or shared JBOD/SAN; this affects S2D deployment and prevents unwanted deployment of MPIO. You can configure existing servers or deploy a physical computer profile to do a bare-metal deployment via BMCs in the targeted physical servers. After this is complete, you can create/manage pools in VMM.

File server nodes can be added from existing machines or bare-metal deployment. The disks of the new server can be added to the clustered Storage Spaces pool. Pools can be tiered (classified). Once a pool is created, you can create a file share – this provisions the virtual disk, configures CSV, and sets up the file system for you – lots of automation under the covers. The wizard in VMM 2016 includes resiliency and tiering.

Monitoring

Right now, SCOM must do all the work – gathering a data from a wide variety of locations and determining health rollups. There’s a lot of management pack work there that is very hardware dependent and limits extensibility.

Microsoft reimagined monitoring by pushing the logic back into the storage system. The storage system determines health of the storage system. Three objects are reported to monitoring (PowerShell, SCOM or 3rd party, consumable through SMAPI):

- The storage system: including node or disk fails

- Volumes

- File shares

Alerts will be remediated automatically where possible. The system automatically detects the change of health state from error to healthy. Updates to external monitoring takes seconds. Alerts from the system include:

- Urgency

- The recommended remediation action

Demo:

One of the cluster nodes is shut down. SCOM reports that a node is missing – there isn’t additional noise about enclosures, disks, etc. The subsystem abstracts that by reporting the higher error – that the server is down. The severity is warning because the pool is still online via the rest of the S2D cluster. The priority is high because this server must be brought back online. The server is restarted, and the alert remediates automatically.

Hardware Platforms

Storage Spaces/JBODs has proven that you cannot use just any hardware. In my experience, DataON stuff (JBOD, CiB, HGST SSD and Seagate HDD) is reliable. On the other hand, SSDs by SanDisk are shite, and I’ve had many reports of issues with Intel and Quanta Storage Spaces systems.

There will be prescriptive configurations though partnerships, with defined platforms, components, and configuration. This is a work in progress. You can experiment with Generation 2 VMs.

S2D Development Partners

I really hope that we don’t see OEMs creating “bundles” like they did for pre-W2008 clustering that cost more than the sum of the otherwise-unsupported individual components. Heck, who am I kidding – of course they will do that!!! That would be the kiss of death for S2D.

FAQ

The Importance of RDMA

Demo Video:

They have two systems connected to a 4 node S2D cluster, with a sum total of 1.2 million 4K IOPS with below 1 millisecond latency, thanks to (affordable) SATA SSDs and Mellanox ConnectX-3 RDMA networking (2 x 40 Gbps ports per client). They remove RDMA from each client system. IOPS is halved and latency increases to around 2 milliseconds. RDMA is what enables low latency and low CPU access to the potential of the SSD capacity of the storage tier.

Hint: the savings in physical storage by using S2D probably paid for the networking and more.

Questions from the Audience

- DPM does not yet support backing up VMs that are stored on ReFS.

- You do not do SMB 3.0 loopback for hyper-convergence. SMB 3.0 is not used … Hyper-V just stores the VMs on the local CSVs of the S2D cluster.

- There is still SMB redirecting in the converged scenario, A CSV is owned by a node, with CSV ownership balancing. When host connects to a share, it is redirected to the owner of the CSV, therefore traffic should be balanced to the separate storage tier.

- In hyper-convergence, the VM might be on node A and the CSV owner might be on another node, with extents all over the place. This is why RDMA is required to connect the S2D nodes.

- Which disk with the required extents do they read from? They read from the disk with the shorted queue length.

- Yes, SSD tiering is possible, including write-back cache, but it sounds like more information is yet to be released.

- They intend to support all-flash systems/virtual disks