Microsoft released a new update rollup to replace the very broken and costly (our time = our money) June rollup, KB3161606. These issues affected Hyper-V on Windows 8.1 and Windows Server 2012 R2 (WS2012 R2).

It’s sad that I have to write this post, but, unfortunately, untested updates are still being released by Microsoft. This is why I advise that updates are delayed by 2 months.

In the case of the issues in the June 2016 update rollup, the fixes are going to require human effort … customers’ human effort … and that means customers are paying for issues caused by a supplier. I’ll let you judge what you think of that (feel free to comment below).

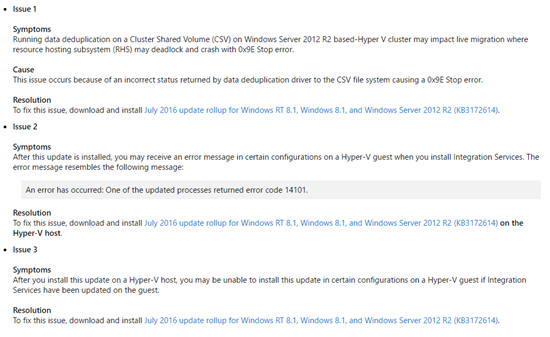

A month after news of the issues in the update became known (the update rollup had already been in the wild for a week or two), Microsoft has issued a superseding update that will fix the issues. At the same time, they have finally publicly acknowledged the issues in the June update.

So it took 1.5 months, from the initial release, for Microsoft to get this update right. That’s why I advise a 2 month delay on approving/deploying updates, and I continue to do so.

What does Microsoft need to fix?

- Change the way updates are created/packaged. This problem has been going on for years. Support are not good at this stuff, and it needs to move into the product groups.

- Microsoft has successfully reacted to market pressure before by putting a special emphasis on change, e.g. the Internet, secure coding, the cloud. Satya Nadella needs to do the same for quality assurance (QA), something that I learned in software engineering classes was as important as the code. I get that edge scenarios are hard to test, but installing/upgrading ICs in a Hyper-V guest OS is hardly a rare situation.

- Start communicating. Put your hands up publicly, and say “mea culpa”, show what went wrong and follow it up with progress reports on the fix.

“Support are not good at this stuff, and it needs to move into the product groups.” Not sure what makes you think this is a ‘support’ issue. I am pretty sure it is the product engineers that are creating and testing the patch. But it does make sense for patches to be distributed by a centralized support organization instead of each product group having individual releases. My understanding is that before the patch is released, support does test it. But, as with any code fix, mistakes can occur. Have you never implemented a ‘fix’ that created other issues?

Interestingly, I never had a problem. I updated my 2012 R2 systems and VMs and never ran into this problem, so I don’t think it was a blanket problem. I actually updated after I was aware of the issue because I wanted to see it myself, and even then I did not have the issue.

“That’s why I advise a 2 month delay on approving/deploying updates, and I continue to do so.” I would suggest that instead of delaying approval/deployment of updates by two months, one should first test each update within one’s own environment and approve it before it is deployed. This best practice is followed in most large shops I have ever worked with. Maybe the smaller companies should start following this practice. It does not take much to set up a WSUS (or some other similar tool) server from which updates can be tested on a subset of servers (hopefully lab machines) before approving distribution to the rest of the organization. Putting a blanket delay of two months on patches means that when critical security patches are released, you are leaving your environment vulnerable for another two months.

Support make the updates, not product groups. This is documented publicly by the media that have interviewed management after previous patching catastrophes.

So, did you test your car before you put it into production? What about your washing machine? You assume that businesses are accidental software testing companies. Nope – and IT usually has more important things to do.

Just wanted to confirm that 3172614 fixed our CSV deadlocking issues. Worst part is that I had a ticket open for 3 weeks with Microsoft and they didn’t know about these issues even though @aidan had already blogged about them. I missed the CSV deadlock part because I didn’t read the comments. Kicking myself right now.

We were previously on a 1-month delay of updates because that was easy to do with CAU. Any advice on how to handle a 2-month delay with CAU/WSUS @aidan?

The product groups & support people working on updates must not have talked to the customer-facing Support people :\

If you deploy WSUS then you can take manual control over updates, and get CAU to pull from WSUS. It requires manual approval, rather than some clever rules … unless there’s a clever way to PowerShell it (which there might be).
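For what it’s worth, the selection logic behind a fixed approval delay is simple to sketch. The Python below is only an illustration of the idea, not a WSUS integration: the `Update` record, the `due_for_approval` function, the placeholder KB names and the dates are all hypothetical, and it assumes you have some way to export each update’s release date and superseded status. On a real WSUS server, the actual approval would still have to be done in the console or with the WSUS PowerShell cmdlets.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class Update:
    """Hypothetical record for one update; not a WSUS data structure."""
    kb: str
    released: date
    superseded: bool = False


def due_for_approval(updates, today, delay_days=60):
    """Return updates released at least `delay_days` ago that are not superseded."""
    cutoff = today - timedelta(days=delay_days)
    return [u for u in updates if u.released <= cutoff and not u.superseded]


if __name__ == "__main__":
    # Placeholder catalog; the KB names and dates are made up for illustration.
    catalog = [
        Update("KB-EXAMPLE-1", date(2016, 5, 10)),
        Update("KB-EXAMPLE-2", date(2016, 4, 12), superseded=True),
        Update("KB-EXAMPLE-3", date(2016, 7, 12)),
    ]
    for update in due_for_approval(catalog, today=date(2016, 7, 25)):
        print(update.kb)
```

Skipping superseded updates matters here: by the time a broken rollup has aged past the delay, a replacement has usually been published, and you want to approve the replacement when its own delay expires rather than the original.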

“did you test your car before you put it into production?”

Absolutely! I don’t recall ever purchasing a car without first taking it out for a test drive.

“You assume that businesses are accidental software testing companies. Nope – and IT usually has more important things to do.”

More important than ensuring that what gets installed into production fits their environment? I don’t think so. Trying to extend your own analogy, do you put in any new piece of software without first giving it a test? My guess is not. A patch is nothing more than a new piece of software. As I said in my first reply, every large organization I have ever worked with tests patches in their lab before deploying them across the enterprise. Without that testing, they don’t know if a patch is going to have an adverse effect on some critical application they have running. And there is no way Microsoft can test against every combination of hardware/software that all their customers are running.

I am not trying to defend Microsoft for the mistake they made with that last patch. But it happens. Did they do it purposely? I seriously doubt it. Have they learned from this? My guess is they have, and they will work to prevent it in the future. Will it happen again? Do humans make mistakes? Yes.

Again, I would hazard a guess that you have, sometime in your career, released a piece of software that broke when put into production. If you haven’t, congratulations – you are the first person I have met in my 40+ years in this business to have not done so.

“Have they learned from this?”

Microsoft hasn’t learned since 2012 … lots of such issues have impacted Windows Server & System Center. I didn’t just pull my 2 month recommendation out of my ass 🙂

Aidan,

I manage a small 2012R2 RDS Session Host farm for our Business College, 5-2012R2 H-V nodes in a failover cluster and 9-2012R2 nodes in the production farm plus a test “simple” collection and a staging simple collection. There is sufficient over-capacity in the summer sessions that I can pause a cluster node and drain 3 session hosts to test stuff that might escape detection in the 1-node simple collections. I have installed the July Rollup in the cluster in stages, finishing the rollout last night, and installed the rollup on Test and Stage. I have experienced no issues with the rollup. I have had no failures when updating the integration components, although one node, the Stage node, did require a second reboot after updating before I could RDP into the thing over the network. I’m going to start a staged rollout of the July Rollup to the production session hosts today. This is a pretty simple environment, but – so far, so good.

For what it’s worth.

Loel

Further remarks on IC’s update anomalies:

About 3/4 of the Session Hosts in the RDS cluster described above exhibited the behavior of the Staging server, that is, I was unable to log in via RDP after rebooting to apply the update. In each case, I repaired the fault by either using the “Connect…” dialogue in HV Manager or FoCM to log in to a console session and reboot the VM -OR- using the “Shut Down…” dialogue and then restarting the VM. In all cases, even when I was unable to RDP into a VM I was able to ping the VM. Has anyone else seen this behavior? I have not tested this outside of my RDS environment yet; is this unique to RDS Session Hosts?

TIA,

Loel

Hi Aidan,

I fully agree with you. When you look at the fix list for the July update, these issues are not listed as fixed, which makes it even worse.

In the last couple of months, Microsoft have been releasing broken updates every patch round, including the security / critical ones. Some issues disappear magically after a later update, but support is not able to explain why. I can agree with you on waiting for 2 months, but unfortunately, some updates also fix pressing issues. It’s always a matter of taking a calculated risk.