Why did Microsoft call the “highly available file server for application data” the Scale-Out File Server (SOFS)? The reason might not be obvious unless you have lots of equipment to play with … or you cheat by using WS2012 R2 Hyper-V Shared VHDX as I did on Tuesday afternoon.

The SOFS can scale out in 3 dimensions.

0: The Basic SOFS

Here we have a basic example of a SOFS that you should have seen blogged about over and over. There are two cluster nodes. Each node is connected to shared storage. This can be any form of supported storage in WS2012/R2 Failover Clustering.

1: Scale Out The Storage

The likely bottleneck in the above example is the disk space. We can scale that out by attaching the cluster nodes to additional storage. Maybe we have more SANs to abstract behind SMB 3.0? Maybe we want to add more JBODs to our storage pool, thus increasing capacity and allowing mirrored virtual disks to have JBOD fault tolerance.

I can provision more disks in the storage, add them to the cluster, and convert them into CSVs for storing the active/active SOFS file shares.

2: Scale Out The Servers

You’re really going to have to have a large environment to do this. Think of the clustered nodes as SAN controllers. How often do you see more than 2 controllers in a single SAN? Yup, not very often (we’re excluding HP P4000 and similar cos it’s weird).

Adding servers gives us more network capacity for client access to the SOFS (Hyper-V, SQL Server, IIS, etc.), and more RAM capacity for caching. WS2012 allows us to use up to 20% of RAM as CSV Cache and WS2012 R2 allows us to use a whopping 80%!
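To put rough numbers on that, here is a small Python sketch of the upper bound on CSV Cache as nodes are added. It only applies the 20% and 80% caps quoted above to an assumed 128 GB of RAM per node. The figures are ceilings, not what a cluster will actually be configured to use, and summing the per-node figures into a cluster-wide total is a simplification for illustration.

```python
# Maximum fraction of physical RAM usable as CSV Cache, per the figures
# quoted in the text (20% on WS2012, 80% on WS2012 R2).
MAX_CSV_CACHE_FRACTION = {
    "WS2012": 0.20,
    "WS2012 R2": 0.80,
}


def max_csv_cache_gb(os_version: str, ram_per_node_gb: float, node_count: int) -> tuple[float, float]:
    """Return (per-node cap, cluster-wide total) in GB.

    The total just adds up the per-node caps; it illustrates how adding
    nodes adds cache RAM and is not a sizing rule.
    """
    fraction = MAX_CSV_CACHE_FRACTION[os_version]
    per_node = ram_per_node_gb * fraction
    return per_node, per_node * node_count


# Assumed example: nodes with 128 GB of RAM each, 2-node vs 4-node cluster.
for version in MAX_CSV_CACHE_FRACTION:
    for nodes in (2, 4):
        per_node, total = max_csv_cache_gb(version, ram_per_node_gb=128, node_count=nodes)
        print(f"{version}, {nodes} nodes x 128 GB: up to {per_node:g} GB/node, {total:g} GB total")
```

Going from 2 to 4 nodes doubles the ceiling, and the jump from WS2012 to WS2012 R2 multiplies it by four.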

3: Scale Out Using Storage Bricks

Go back to the previous example. There you saw a single Failover Cluster with 4 nodes running the active/active SOFS cluster role. That’s 2-4 nodes + storage. Let’s call that a block, named Block A. We can add more of these blocks … into the same cluster. Think about that for a moment.

EDIT: When I wrote this article I referred to each unit of storage + servers as a block. I checked with Claus Joergensen of Microsoft and the terms being used within Microsoft are storage bricks or storage scale units. So wherever you see “block”, swap in “storage brick” or “storage scale unit”.

I’ve built it and it’s simple. Some of you will overthink this … as you are prone to do with SOFS.

What the SOFS does is abstract the fact that we have 2 blocks. The client servers really don’t know; we just configure them to access a single namespace called \\Demo-SOFS1, which is the CAP (Client Access Point) of the SOFS role.

The CSVs that live in Block A only live in Block A, and the CSVs that live in Block B only live in Block B. The disks in the storage of Block A are only visible to the servers in Block A, and the same goes for Block B. The SOFS just keeps track of which node is running which CSV, and therefore knows which servers are responsible for each share. There is a single SOFS role in the entire cluster, so we have a single CAP and a single UNC namespace. We create the shares for Block A in the same place as we create them for Block B … in that same single SOFS role.
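Here is a rough Python model of that arrangement. It is not how the cluster service is implemented; `StorageBrick`, `SofsRole` and every node, CSV and share name are hypothetical. It captures the two points above: one SOFS role with one CAP hands out every UNC path, and which servers serve a share is decided purely by which brick holds the share’s CSV. Following the article, it assumes a brick’s CSVs are served only by that brick’s nodes.

```python
from dataclasses import dataclass, field


@dataclass
class StorageBrick:
    """Hypothetical model of one storage brick: some nodes plus the CSVs on their storage."""
    name: str
    nodes: set[str]
    csvs: set[str]


@dataclass
class SofsRole:
    """Hypothetical model of the single SOFS role that spans every brick in the cluster."""
    cap_name: str                                          # Client Access Point name
    bricks: list[StorageBrick] = field(default_factory=list)
    shares: dict[str, str] = field(default_factory=dict)   # share name -> CSV name

    def brick_for_csv(self, csv: str) -> StorageBrick:
        # Each CSV lives in exactly one brick.
        for brick in self.bricks:
            if csv in brick.csvs:
                return brick
        raise KeyError(f"No brick holds {csv}")

    def add_share(self, share: str, csv: str) -> str:
        """Create a share on a CSV; the UNC path always uses the one CAP."""
        self.brick_for_csv(csv)  # fail early if the CSV is unknown
        self.shares[share] = csv
        return f"\\\\{self.cap_name}\\{share}"

    def nodes_for_share(self, share: str) -> set[str]:
        """Servers responsible for a share: the nodes of the brick holding its CSV."""
        return self.brick_for_csv(self.shares[share]).nodes


sofs = SofsRole("Demo-SOFS1", bricks=[
    StorageBrick("Block A", {"Node1", "Node2"}, {"CSV-A1"}),
    StorageBrick("Block B", {"Node3", "Node4"}, {"CSV-B1"}),
])
print(sofs.add_share("ShareA", "CSV-A1"))      # \\Demo-SOFS1\ShareA
print(sofs.add_share("ShareB", "CSV-B1"))      # \\Demo-SOFS1\ShareB
print(sorted(sofs.nodes_for_share("ShareB")))  # ['Node3', 'Node4']
```

Clients only ever see `\\Demo-SOFS1\...`. Adding another brick is just another `StorageBrick` entry, and the namespace does not change.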

A Real World Example

I don’t have enough machinery to demo/test this, so I fired up a bunch of VMs on WS2012 R2 Hyper-V to give it a go:

- Test-SOFS1: Node 1 of Block A

- Test-SOFS2: Node 2 of Block A

- Test-SOFS3: Node 1 of Block B

- Test-SOFS4: Node 2 of Block B

All 4 VMs are in a single guest cluster. There are 3 shared VHDX files:

- BlockA-Disk1: The disk that will store CSV1 for Block A, attached to Test-SOFS1 + Test-SOFS2

- BlockB-Disk1: The disk that will store CSV1 for Block B, attached to Test-SOFS3 + Test-SOFS4

- Witness Disk: The single witness disk for the guest cluster, attached to all VMs in the guest cluster
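The lab layout above can be written down as data and sanity-checked with a short Python sketch. The node and disk names come from the lists above. The rules it enforces are assumptions based on this design, not anything the cluster itself requires: each CSV disk is attached to exactly the nodes of one brick, and the witness disk is attached to every node.

```python
# Lab topology from the lists above: which VMs form each brick,
# and which VMs each shared VHDX is attached to.
BRICKS = {
    "Block A": {"Test-SOFS1", "Test-SOFS2"},
    "Block B": {"Test-SOFS3", "Test-SOFS4"},
}
DISK_ATTACHMENTS = {
    "BlockA-Disk1": {"Test-SOFS1", "Test-SOFS2"},
    "BlockB-Disk1": {"Test-SOFS3", "Test-SOFS4"},
    "Witness Disk": {"Test-SOFS1", "Test-SOFS2", "Test-SOFS3", "Test-SOFS4"},
}
WITNESS = "Witness Disk"


def check_topology(bricks: dict[str, set[str]],
                   attachments: dict[str, set[str]],
                   witness: str) -> list[str]:
    """Return a list of problems (empty if the layout matches the design)."""
    all_nodes = set().union(*bricks.values())
    problems = []
    for disk, nodes in attachments.items():
        if disk == witness:
            # The single witness disk is shared by the whole guest cluster.
            if nodes != all_nodes:
                problems.append(f"{disk} must be attached to every node")
        elif not any(nodes == brick_nodes for brick_nodes in bricks.values()):
            # A CSV disk must be attached to all the nodes of one brick and no others.
            problems.append(f"{disk} is not attached to exactly one brick's nodes")
    return problems


print(check_topology(BRICKS, DISK_ATTACHMENTS, WITNESS) or "topology OK")
```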

Here are the 4 nodes in the single cluster that make up my logical Blocks A (1 + 2) and B (3 + 4). There is no “block definition” in the cluster; it’s purely an architectural concept. I don’t even know if MSFT has a name for it.

Here are the single witness disk and CSVs of each block:

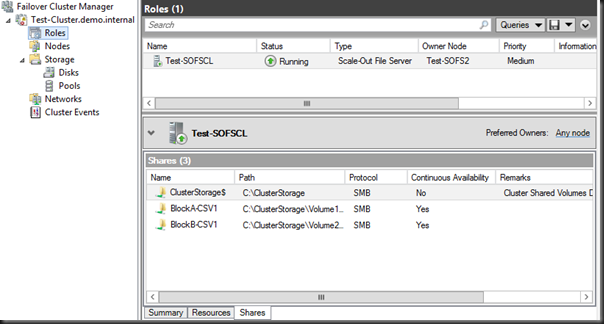

Here is the single active/active SOFS role that spans both blocks A and B. You can also see the shares that reside in the SOFS, one on the CSV in Block A and the other in the CSV in Block B.

And finally, here is the end result: the shares from both logical blocks in the cluster, residing in the single UNC namespace:

It’s quite a cool solution.

Does the validation wizard complain because your storage is not attached to all nodes?

Good question!

I just double-checked that in the lab. On the configured cluster: all green & no warnings. I destroyed the cluster and tested as if starting from new. Once again, all green and no warnings. So no, validation does not care about the disks being constrained within a “storage brick”.

Hi Aidan, thanks for all the massive knowledge in your Blog.

The example you describe is very sophisticated in the first place, and we would actually like to do something similar. But if scaling out this way means that with every “brick” or “block” you add, you create a new SMB share name, isn’t the scaling benefit of using the share name (e.g. for automated deployment) lost? Every time you add bricks, you would have to adapt scripts and stuff to the new share path.

Yes, you would create shares. Just use a consistent naming standard. This is no different to creating LUNs in a SAN, so I don’t see a problem. And if you’re using VMM then you create/manage the shares in VMM, and it automatically sees it as new storage on the assigned hosts/cluster that you can place VMs onto.
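For scripts that don’t go through VMM, a consistent naming standard means a script can discover shares by pattern instead of hard-coding paths. Below is a minimal Python sketch of that idea. The `Block<letter>-CSV<n>` convention and the helper names are hypothetical. The list of existing share names is passed in as plain data, and how you collect it is left out.

```python
import re

# Hypothetical naming standard: Block<letter>-CSV<number>, e.g. BlockC-CSV1.
SHARE_PATTERN = re.compile(r"^Block(?P<brick>[A-Z])-CSV(?P<csv>\d+)$")


def share_name(brick: str, csv_index: int) -> str:
    """Name for a new share, following the hypothetical convention."""
    return f"Block{brick}-CSV{csv_index}"


def unc_path(cap_name: str, share: str) -> str:
    """Every share lives under the single SOFS namespace."""
    return f"\\\\{cap_name}\\{share}"


def deployment_targets(cap_name: str, existing_shares: list[str]) -> list[str]:
    """All shares that follow the convention, so a new brick is picked up without editing the script."""
    return [unc_path(cap_name, s) for s in sorted(existing_shares) if SHARE_PATTERN.match(s)]


shares = ["BlockA-CSV1", "BlockB-CSV1", share_name("C", 1), "Library"]
for path in deployment_targets("Demo-SOFS1", shares):
    print(path)
```

Adding Block C then only means creating `BlockC-CSV1` to the standard. The script picks it up on its next run, and shares outside the convention (such as `Library` here) are ignored.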