When you are done reading this post, see the update I added for SMB Live Migration on Windows Server 2012 R2 Hyper-V.

Unless you’ve been hiding under a rock for the last 18 months, you probably know that Windows Server 2012 (WS2012) Hyper-V (and IIS and SQL Server) supports storing content (such as virtual machines) on SMB 3.0 (WS2012) file servers (and on Scale-Out File Server active/active clusters). Performance ranges from matching or slightly beating iSCSI on 1 GbE to crushing Fibre Channel on 10 GbE or faster.

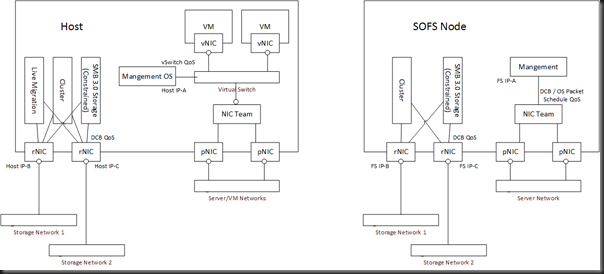

Big pieces of this design are SMB Multichannel (think simple, configuration-free, dynamic MPIO for SMB traffic) and SMB Direct (RDMA: low latency and low CPU impact for non-TCP SMB 3.0 traffic). How does one network this design? RDMA is the driving force. I’ve talked to a lot of people about this topic over the last year, and they normally overthink the design, looking for solutions to problems that don’t exist. In my core market, I don’t expect lots of RDMA and InfiniBand NICs to appear, but I thought I’d post how I might do a network design. iWARP was in my head for this because I’m hoping I can pitch the idea for my lab at the office.

On the left we have one or more Hyper-V hosts. A cluster can have up to 64 nodes, and potentially lots of clusters can connect to a single SOFS (not necessarily with 64 nodes in each!).

On the right, we have between 2 and 8 file servers that make up a Scale-Out File Server (SOFS) cluster with SAS-attached (SAN or JBOD/Storage Spaces) or Fibre Channel storage. More NICs would be required if the SOFS used iSCSI storage, probably physical NICs with MPIO.

There are 3 networks in the design:

- The Server/VM networks. They might be flat, but in this kind of design I’d expect to see some VLANs. Hyper-V Network Virtualization might be used for the VM Networks.

- Storage Network 1. This is one isolated and non-routed subnet, primarily for storage traffic. It will also be used for Live Migration and cluster traffic. It’s 10 GbE or faster and already isolated, so it makes sense to me to use it for those too.

- Storage Network 2. This is a second isolated and non-routed subnet. It serves the same function as Storage Network 1.

Why 2 storage networks, ideally on 2 different switches? Two reasons:

- SMB Multichannel: It requires each multichannel NIC to be on a different subnet when connecting to a clustered file server, which includes the SOFS role.

- Reliable cluster communications: I have 2 networks for my cluster communications traffic, servicing my cluster design need for a reliable heartbeat.
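A quick way to sanity-check the first requirement before building anything is to confirm that no two storage NICs on a node share a subnet. This is a minimal sketch using Python's standard `ipaddress` module; the NIC names and addresses are hypothetical placeholders for your own storage addressing.

```python
import ipaddress
from itertools import combinations


def subnet_conflicts(nic_cidrs):
    """Return pairs of NICs on one node whose subnets overlap.

    nic_cidrs maps a NIC name to an "address/prefix" string. An empty
    result means every NIC is on its own subnet, which is what SMB
    Multichannel needs when talking to a clustered file server.
    """
    networks = {
        name: ipaddress.ip_interface(cidr).network
        for name, cidr in nic_cidrs.items()
    }
    return [
        (a, b)
        for (a, net_a), (b, net_b) in combinations(networks.items(), 2)
        if net_a.overlaps(net_b)
    ]


# Hypothetical addressing: one rNIC per isolated storage subnet.
good = {"rNIC1": "10.0.1.11/24", "rNIC2": "10.0.2.11/24"}
bad = {"rNIC1": "10.0.1.11/24", "rNIC2": "10.0.1.12/24"}
print(subnet_conflicts(good))  # []
print(subnet_conflicts(bad))   # [('rNIC1', 'rNIC2')]
```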

The NICs used for the SMB/cluster traffic are NOT teamed, because teaming does not work with RDMA. Each physical rNIC has its own IP address on the relevant (isolated and non-routed) storage subnet. These NICs do not go through the virtual switch, so the easy per-vNIC QoS approach I’ve mostly talked about does not apply. Note that RDMA is not TCP. This means that when an SMB connection streams data, the OS packet scheduler cannot see it, which rules out OS Packet Scheduler QoS rules. Instead, you will need rNICs that support Data Center Bridging (DCB), and your switches must also support DCB. You basically create QoS rules on a per-protocol basis and push them down to the NICs so that the hardware (which sees all traffic) applies QoS and SLAs. A side effect is lower CPU utilization.
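Per-protocol DCB QoS of this kind is Enhanced Transmission Selection (ETS, IEEE 802.1Qaz): each traffic class is guaranteed a minimum share of the link, and a busy class can borrow bandwidth that another class isn't using. On Windows you would configure it with the NetQos cmdlets (for example `New-NetQosPolicy` and `New-NetQosTrafficClass`), not with Python; this sketch only works through the arithmetic for a single 10 GbE rNIC. The class names and percentages are hypothetical, and sharing idle bandwidth in proportion to the configured percentages is a simplifying assumption, since how borrowing actually works depends on the NIC and switch.

```python
def ets_minimums(link_gbps, percentages):
    """Minimum guaranteed bandwidth (Gbps) per traffic class under ETS-style shares."""
    if sum(percentages.values()) > 100:
        raise ValueError("ETS bandwidth percentages must not exceed 100")
    return {tc: link_gbps * pct / 100 for tc, pct in percentages.items()}


def share_when_others_idle(link_gbps, percentages, busy):
    """Approximate split (Gbps) when only the classes in `busy` have traffic.

    Assumption: idle classes' bandwidth is lent to busy classes in
    proportion to their configured percentages. Real hardware may differ.
    """
    if not busy:
        return {}
    total = sum(percentages[tc] for tc in busy)
    return {tc: link_gbps * percentages[tc] / total for tc in busy}


# Hypothetical policy for one 10 GbE rNIC.
policy = {"SMB": 50, "LiveMigration": 30, "Cluster": 10, "Default": 10}
print(ets_minimums(10, policy))
# {'SMB': 5.0, 'LiveMigration': 3.0, 'Cluster': 1.0, 'Default': 1.0}
print(share_when_others_idle(10, policy, ["SMB", "Cluster"]))
```

The point of the second function is the "minimum, not maximum" nature of the guarantee: when Live Migration is idle, SMB is not capped at its 5 Gbps floor.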

Note: SMB traffic is restricted to the rNICs by using an SMB Multichannel constraint.
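An SMB Multichannel constraint tells the SMB client which interfaces it may use for a given server; on Windows it is created with the `New-SmbMultichannelConstraint` cmdlet. The Python below is only a model of that rule as I understand it, not the Windows implementation: a server with a constraint is reached only through the listed interfaces, and a server without one can use any interface. The interface and server names are hypothetical.

```python
def eligible_interfaces(client_ifaces, constraints, server):
    """Interfaces SMB may use to reach `server` under a constraint map.

    constraints maps a server name to the set of interface names SMB is
    allowed to use for it. Servers without an entry are unconstrained.
    Server names are compared case-insensitively.
    """
    normalized = {name.lower(): allowed for name, allowed in constraints.items()}
    allowed = normalized.get(server.lower())
    if allowed is None:
        return list(client_ifaces)
    return [iface for iface in client_ifaces if iface in allowed]


host_ifaces = ["vEthernet (Management)", "rNIC1", "rNIC2"]
constraints = {"SOFS1": {"rNIC1", "rNIC2"}}  # hypothetical SOFS name
print(eligible_interfaces(host_ifaces, constraints, "sofs1"))  # ['rNIC1', 'rNIC2']
print(eligible_interfaces(host_ifaces, constraints, "FS2"))
# ['vEthernet (Management)', 'rNIC1', 'rNIC2']
```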

In the host(s), the management traffic does not go through the rNICs, because they are isolated and non-routed. Instead, the Management OS traffic (monitoring, configuration, remote desktop, domain membership, etc.) all goes through the virtual switch using a virtual NIC. Virtual NIC QoS rules are applied by the virtual switch.

In the SOFS cluster nodes, management traffic will go through a traditional (WS2012) NIC team. You probably should apply per-protocol QoS rules on the management OS NIC for things like remote management, RDP, monitoring, etc. OS Packet Scheduler rules will do because you’re not using RDMA on these NICs, and this is the cheapest option. You can use DCB rules here, but they require end-to-end DCB support (NIC, switch, switch, etc., NIC) to work.

What about backup traffic? I can see a number of options. Remember: with SMB 3.0 storage, the agent on the hosts causes VSS to create a coordinated snapshot, and the backup server retrieves the backup data from a permission-controlled (Backup Operators) hidden share on the file server or SOFS (yes, your backup server will need to understand this).

- Dual/Triple Homed Backup Server: The backup server will be connected to the server/VM networks. It will also be connected to one or both of the storage networks, depending on how much network resilience you need for backup and what your backup product can do. A QoS (DCB) rule will be needed for each backup protocol.

- A dedicated backup NIC (team): A single physical NIC (or a NIC team) will be used for backup traffic on the host and SOFS nodes. No QoS rules are required for backup traffic because it is alone on the subnet.

- A backup VLAN: Create a backup traffic VLAN and trunk it through to a second vNIC (bound to the VLAN) in the hosts via the virtual switch. Apply QoS on this vNIC. For the SOFS nodes, create a new team interface and bind it to the backup VLAN. Apply OS Packet Scheduler rules on the SOFS nodes for management and backup protocols.

With this design you get all the connectivity, isolation, and network path fault tolerance that you might have needed with 8 NICs plus Fibre Channel/SAS HBAs, but with superior storage performance. QoS is applied using DCB to guarantee minimum levels of service for the protocols over the rNICs.

In reality, it’s a simple design. I think people overthink it, looking for a NIC team or a protocol connection process for the rNICs. None of that is needed. You have 2 isolated networks, and SMB Multichannel figures it out for itself (it makes MPIO look silly, in my opinion).
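To illustrate why no team is needed, here is a simplified model (not the actual SMB client logic) of how two isolated, non-routed subnets translate into paths: a client NIC can only reach server NICs on its own subnet, so you get one path per storage network, and losing one network still leaves the other. The names and addresses are hypothetical.

```python
import ipaddress


def candidate_channels(client_nics, server_nics):
    """Pair each client NIC with the server NICs whose address is on its subnet.

    Simplified model: on isolated, non-routed subnets, a client NIC can
    only reach server NICs on the same subnet.
    """
    pairs = []
    for c_name, c_cidr in client_nics.items():
        c_net = ipaddress.ip_interface(c_cidr).network
        for s_name, s_cidr in server_nics.items():
            if ipaddress.ip_interface(s_cidr).ip in c_net:
                pairs.append((c_name, s_name))
    return pairs


host = {"rNIC1": "10.0.1.11/24", "rNIC2": "10.0.2.11/24"}
sofs_node = {"rNIC1": "10.0.1.21/24", "rNIC2": "10.0.2.21/24"}
print(candidate_channels(host, sofs_node))
# [('rNIC1', 'rNIC1'), ('rNIC2', 'rNIC2')]

# Lose Storage Network 1 on the host: one path survives, no team required.
del host["rNIC1"]
print(candidate_channels(host, sofs_node))
# [('rNIC2', 'rNIC2')]
```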

The networking chapter of Windows Server 2012 Hyper-V Installation And Configuration Guide goes from the basics through to the advanced steps of understanding these concepts and implementing them.

Hi Aidan,

Possibly a daft question but does rNIC = RDMA NIC?

Have a copy of your book on my desk at work but haven’t had time to dive into it properly yet!

Thanks,

Chris.

Correct.

How are you creating multiple vNICs on the rNICs? Or is it just multiple vIPs on them?

1 IP each. Configuring QoS per protocol on the rNICs.

I have a huge question that perhaps you can help me with. I have NetApp storage that supports SMB 3.0 natively. I know that, traditionally, to obtain zero-downtime VMs in the event of host failure, I need a Scale-Out File Server (preferably a cluster) between my storage and my Hyper-V 3 hosts.

The Hyper-V hosts (3 in total) would be in a Hyper-V cluster connecting to the SOFS cluster via RDMA-capable 10 Gb interfaces (6 per Hyper-V host), with two additional RDMA-capable 10 Gb interfaces for client-to-VM connections.

I just wanted to know if I still need the SOFS cluster if my storage supports SMB 3.0 natively.

Any guidance would be greatly appreciated.

I honestly can’t comment on the NetApp solution, other than some of their VM migration/conversion stuff is REALLY cool. SMB 3.0 is the best way to connect to storage but I don’t know how NetApp does it. The benefit of SOFS is that it does abstract the physical storage, and it’s adding layers of manageability and caching. But beyond that, I can’t comment any more …. sorry! Maybe someone else wants to chip in on NetApp SMB 3.0?

In your expert opinion, and I do mean expert, does my approach provide the best performance from SOFS to storage, Hyper-V host to SOFS, and clients to VMs?

In my experience, even without RDMA, Host-SOFS-Storage beats Host-Storage … with the same back end storage. But I have never worked with SMB 3.0 natively on a SAN.

One last question: I read your related posts above, and unfortunately I am not at the same level of expertise as many of you, but can MCIO using RDMA-capable adapters be used to create clustered VMs that require pass-through disks?

I don’t know what you mean.

BTW. I purchased both of your books for Kindle and paperback.

Thank you!

Suppose you only have 2 ports of traditional 10GbE, not rNICs, on each SOFS node. How would you handle the networking for this? Would you team the two ports and create VLANs? If so, how many VLANs? I want to keep my cabling simple and create a converged fabric.

I won’t have SMB Direct, but I’ll have SMB Multichannel.

VLANs:

Management

Cluster1

Cluster2

Am I on the right track?

If I only had 2 x 10 GbE, and NO other NICs of any kind, I’d team them, connect the virtual switch, and create virtual management OS NICs for the host/cluster/LM functions.

“Note that RDMA is not TCP. This means that when an SMB connection streams data, the OS packet scheduler cannot see it.”

I don’t think this statement is entirely true. There is a flavor of RDMA that is TCP/IP-based, called iWARP. It’s even routable, and its advantage over InfiniBand and RoCE is that you don’t need special switches; a standard 10 Gb/s switch will do. As for the second part, the reason the OS packet scheduler cannot see the traffic is how RDMA works. Think about it: if the network adapter is directly accessing memory, how would the OS intervene? It’s the same reason you can’t have a virtual switch and RDMA on the same adapter. If you add something, then it’s no longer direct, and more than likely CPU cycles are involved (so much for offloading the CPU).

For QoS, I know the Chelsio iWARP cards can perform QoS with their traffic management, but that happens on the card.

Joe

Do you use different VLANs for the two different storage networks? How about RoCE v1 or v2?

Two subnets are required for SMB Multichannel over 2 NICs when dealing with a SOFS; it’s a requirement of clustering. I would go RoCE v2.

Aidan,

I’m going nutz trying to replicate your design, but having terrible performance.

In your diagram, you clearly specify having the cluster on the rNIC side, but I’m at a total loss verifying that the communication stays on that side! I think my communication is happening on the pNIC side. What’s the process to configure it like you’ve diagrammed?

Design it like above, configure SMB Multichannel constraints, and use PerfMon to verify. Regarding performance: some h/w is reportedly shite, e.g. Intel JBOD, Quanta, SanDisk SSD, and so on.
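One rough way to do the "verify" step is to compare how many bytes each interface moved between two counter samples taken during a storage-heavy test. This sketch assumes you already have cumulative byte counts per interface (how you collect them is up to you); keep in mind that SMB Direct traffic bypasses the OS TCP/IP stack, so for the rNICs you would want RDMA-specific counters rather than the generic network ones. The interface names and numbers are hypothetical.

```python
def storage_share(before, after, storage_ifaces):
    """Fraction of bytes moved between two samples that went via storage NICs.

    before/after map interface name -> cumulative byte count.
    """
    deltas = {nic: after[nic] - before.get(nic, 0) for nic in after}
    total = sum(deltas.values())
    if total == 0:
        return 0.0  # nothing moved between the samples
    return sum(deltas.get(nic, 0) for nic in storage_ifaces) / total


# Hypothetical samples taken while a VM copies a large file.
before = {"Management": 1_000, "rNIC1": 0, "rNIC2": 0}
after = {"Management": 2_000, "rNIC1": 4_500, "rNIC2": 4_500}
print(storage_share(before, after, ["rNIC1", "rNIC2"]))  # 0.9
```

A value well below 1.0 during a storage test would suggest SMB is leaking onto the pNIC side, which is what the constraints are meant to prevent.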

Hi Aidan,

Went to your session at Ignite in Chicago a couple weeks ago. One of the best ones at the show!

I am building your exact design from this blog, with Chelsio iWARP rNICs. Q: Should my rNICs have DNS configured? If I configure DNS on these rNICs, DNS registration works as expected for the CNO SOFS cluster resource. But of course my file server hosts also get IPs associated with their hostnames, which kinda kills management of them, since those rNICs are not routable. What am I missing? Sorry for the rather basic question! Thank you!

Yes, you do assign DNS settings to the rNICs, and you enable client communications for the associated cluster networks. I’ve never experienced a management issue.

Thank you very much! By management issues I mean that the two file server hosts in the SOFS cluster get DNS records registered for their rNIC IPs, and those IPs are not routable. In my lab, my management clients (domain controllers, SCOM, etc.), depending on which IP is returned by DNS, can or cannot access the hosts by that returned IP. For example, if I ping one of the hosts, I may or may not get a management IP returned, so the ping may or may not be successful. Doesn’t this create issues for Group Policy, AD, etc.? Or am I missing something entirely? Thank you once again!

What I’m really struggling with is this: How can I prevent the rNIC IPs from getting registered to the hosts themselves? One of the very basic health tests I do is ping the hostnames of the SOFS hosts. These ping responses are failing anytime a rNIC IP is returned. Thank you once again! You’re a great asset to the community!