Notes taken from TechEd NA 2012 session WSV310:

Volume Platform for Availability

Huge number of requests and a lot of feedback from customers. MSFT spent a year on customer research (US, Germany, and Japan) with many customers of different sizes. The result is Continuous Availability, with zero data loss and transparent failover, as the successor to High Availability.

Targeted Scenarios

- Business in a box Hyper-V appliance

- Branch in a box Hyper-V appliance

- Cloud/Datacenter high performance storage server

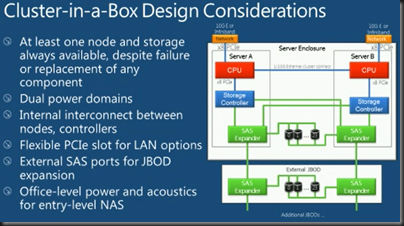

What’s Inside A Cluster In A Box?

It will be somewhat flexible. MSFT is giving guidance on the essential components, so expect variations. MSFT noticed people getting cluster networking wrong, so this is hardwired in the box. Expansion for additional JBOD trays will be included. Office-level power and acoustics will expand this solution into SME, retail, and similar sites.

Lots of partners can be announced and some cannot yet:

- HP

- Fujitsu

- Intel

- LSI

- Xio

- And more

More announcements to come in this “wave”.

Demo Equipment

They show some sample equipment from two Original Design Manufacturers (ODMs; they design and sell to OEMs for rebranding). One with SSD and InfiniBand is shown. A more modest one is shown too:

That bottom unit is a 3U cluster in a box with 2 servers and 24 SFF SAS drives. It appears to have additional PCI expansion slots in a compute blade. We see it in a demo later and it appears to have JBOD (mirrored Storage Spaces) and 3 cluster networks.

RDMA aka SMB Direct

Been around for quite a while but mostly restricted to the HPC space. WS2012 will bring it into wider usage in data centres. I wouldn’t expect to see RDMA outside of the data centre too much in the coming year or two.

RDMA-enabled NICs are also known as R-NICs. RDMA offloads SMB CPU processing in large bandwidth transfers to dedicated functions in the NIC. That minimises CPU utilisation for huge transfers. It reduces the “cost per byte” of data transfer through the networking stack in a server by bypassing most layers of software and communicating directly with the hardware. There are three R-NIC options:

- iWARP: TCP/IP based. Works with any 10 GbE switch. RDMA traffic routable. Currently (WS2012 RC) limited to 10 Gbps per NIC port.

- RoCE (RDMA over Converged Ethernet): Works with high-end 10/40 GbE switches. Offers up to 40 Gbps per NIC port (WS2012 RC). RDMA not routable via existing IP infrastructure. Requires DCB switch with Priority Flow Control (PFC).

- InfiniBand: Offers up to 54 Gbps per NIC port (WS2012 RC). Switches typically less expensive per port than 10 GbE. Switches offer 10/40 GbE uplinks. Not Ethernet based. Not routable currently. Requires InfiniBand switches. Requires a subnet manager on the switch or on the host.
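The trade-offs between the three R-NIC types can be captured in a small lookup table. This is only a minimal Python sketch using the figures quoted in the session for WS2012 RC; the `RdmaOption` class and the `candidates` helper are hypothetical names made up for illustration and are not part of any Microsoft tooling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RdmaOption:
    """One R-NIC technology, as described in the session notes (WS2012 RC)."""
    name: str
    max_gbps_per_port: int
    ethernet_based: bool
    routable: bool
    switch_requirement: str


RDMA_OPTIONS = [
    RdmaOption("iWARP", 10, True, True, "any 10 GbE switch"),
    RdmaOption("RoCE", 40, True, False, "DCB switch with Priority Flow Control"),
    RdmaOption("InfiniBand", 54, False, False, "InfiniBand switch plus subnet manager"),
]


def candidates(min_gbps, need_routable=False, need_ethernet=False):
    """Return the names of the options that satisfy the given constraints."""
    return [
        option.name
        for option in RDMA_OPTIONS
        if option.max_gbps_per_port >= min_gbps
        and (option.routable or not need_routable)
        and (option.ethernet_based or not need_ethernet)
    ]


if __name__ == "__main__":
    print(candidates(10, need_routable=True))   # only iWARP is routable
    print(candidates(40, need_ethernet=True))   # only RoCE is 40 Gbps on Ethernet
```

The point of the sketch is that the choice is driven by constraints: if the RDMA traffic must be routed you are left with iWARP, and if you need more than 40 Gbps per port you are left with InfiniBand.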

RDMA can also be combined with SMB Multichannel for load balancing and failover (LBFO).

Applications (Hyper-V or SQL Server) do not need to change to use RDMA; the decision to use SMB Direct is made at run time.

Partners & RDMA NICs

- Mellanox ConnectX-3 Dual Port Adapter with VPI InfiniBand

- Intel 10 GbE iWARP Adapter For Server Clusters NE020

- Chelsio T3 line of 10 GbE Adapters (iWARP), have 2 and 4 port solutions

We then see a live demo of 10 Gigabytes (not Gigabits) per second over Mellanox InfiniBand. They pull 1 of the 2 cables and throughput drops to 6,000 Megabytes per second (about 6 Gigabytes per second). Pop the cable back in and flow returns to normal. CPU utilisation stays below 5%.
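A quick sanity check on the demo numbers, converting bytes to bits. This is a rough sketch that assumes the two cables are two InfiniBand ports at the 54 Gbps per-port figure quoted earlier, and it ignores protocol overhead; the function names are my own.

```python
BITS_PER_BYTE = 8
PORT_GBPS = 54  # assumed: InfiniBand per-port figure quoted for WS2012 RC


def gbytes_to_gbits(gbytes_per_sec):
    """Convert a throughput in Gigabytes/s to Gigabits/s."""
    return gbytes_per_sec * BITS_PER_BYTE


def fits_on_ports(gbytes_per_sec, ports, port_gbps=PORT_GBPS):
    """True if the throughput fits within the combined line rate of the ports."""
    return gbytes_to_gbits(gbytes_per_sec) <= ports * port_gbps


print(gbytes_to_gbits(10))         # 80 Gbps for the 10 Gigabytes/s demo
print(fits_on_ports(10, ports=1))  # False: 80 Gbps needs more than one 54 Gbps port
print(fits_on_ports(10, ports=2))  # True: two ports give 108 Gbps
print(fits_on_ports(6, ports=1))   # True: 48 Gbps fits on the remaining port
```

So 10 Gigabytes per second needs both links, and a drop to roughly 6 Gigabytes per second on a single link is consistent with one port running near its line rate.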

Configurations and Building Blocks

- Start with single Cluster in a Box, and scale up with more JBODs and maybe add RDMA to add throughput and reduce CPU utilisation.

- Scale horizontally by adding more storage clusters. Live Migrate workloads, spread workloads between clusters (e.g. fault tolerant VMs are physically isolated for top-bottom fault tolerance).

- DR is possible via Hyper-V Replica because it is storage independent.

- Cluster-in-a-box could also be the Hyper-V cluster.

This is a flexible solution. Manufacturers will offer new refined and varied options. You might find a simple low cost SME solution and a more expensive high end solution for data centres.

Hyper-V Appliance

This is a cluster in a box that is both Scale-Out File Server and Hyper-V cluster. The previous 2 node Quanta solution is set up this way. It’s a value solution using Storage Spaces on the 24 SFF SAS drives. The spaces are mirrored for fault tolerance. This is DAS for the 2 servers in the chassis.
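Mirroring costs capacity, so it is worth doing the arithmetic before sizing one of these. A minimal sketch, assuming a simple mirror where every block is stored `copies` times and ignoring Storage Spaces metadata overhead; the 1 TB drive size is a made-up figure, as the session did not state drive capacities.

```python
def mirrored_usable_tb(drive_count, drive_tb, copies=2):
    """Approximate usable capacity of a mirrored pool, ignoring overhead."""
    if copies < 2:
        raise ValueError("a mirror needs at least 2 copies")
    return drive_count * drive_tb / copies


# 24 SFF drives at a hypothetical 1 TB each
print(mirrored_usable_tb(24, 1.0))            # 12.0 with a two-way mirror
print(mirrored_usable_tb(24, 1.0, copies=3))  # 8.0 with a three-way mirror
```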

What Does All This Mean?

SAN is no longer your only choice, whether you are an SME or in the data centre space. SMB Direct (RDMA) enables massive throughput. Cluster-in-a-Box enables Hyper-V appliances and Scale-Out File Servers in ready-made kits that are continuously available and scalable (up and out).

“InfiniBand: Ethernet based. Works with high-end 10/40 GbE switches. Offers up to 40 Gbps per NIC port (WS2012 RC). RDMA not routable via existing IP infrastructure. Requires DCB switch with Priority Flow Control (PFC).

RoCE (RDMA over Converged Ethernet): Offers up to 54 Gbps per NIC port (WS2012 RC). Switches typically less expensive per port than 10 GbE. Switches offer 10/40 GbE uplinks. Not Ethernet based. Not routable currently. Requires InfiniBand switches. Requires a subnet manager on the switch or on the host.”

You have switched the explanations of InfiniBand and RoCE.

Fixed, thanks.

Hi Aidan, great blog, very informative. I’m loving the idea of a cluster in a box. I’m trying to find out who is selling these today. I was hoping that Dell would be entering this market. I think all I see out there is Fujitsu? Do you know of any companies selling these right now?

Search my blog and you’ll find some results.