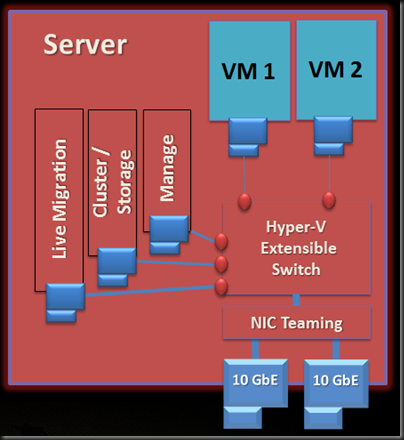

We continue further down the road of understanding converged fabrics in WS2012 Hyper-V. The following diagram illustrates a possible design goal:

Go through the diagram of this clustered Windows Server 2012 Hyper-V host:

- In case you're wondering, this example uses SAS- or FC-attached storage, so it doesn't require Ethernet NICs for iSCSI. Don't worry, iSCSI fans: I'll come to that topic in another post.

- There are two 10 GbE NICs in a NIC team. We covered that already.

- There is a Hyper-V Extensible Switch that is connected to the NIC team. OK.

- Two VMs are connected to the virtual switch. Nothing unexpected there!

- Huh! The host, or the parent partition, has 3 NICs for cluster communications/CSV, management, and live migration. But … they’re connected to the Hyper-V Extensible Switch?!?!? That’s new! They used to require physical NICs.
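For readers who want to picture the bottom half of the diagram in PowerShell, here is a minimal sketch of building the NIC team and the Hyper-V Extensible Switch on top of it. The physical adapter names ("10GbE-1", "10GbE-2") and the team name ("ConvergedTeam") are hypothetical; only External1 comes from this post. Switch-independent teaming with Hyper-V Port load balancing is one reasonable choice here, not the only one.

```powershell
# Hypothetical physical adapter names; check yours with Get-NetAdapter.
$teamMembers = "10GbE-1", "10GbE-2"

# Team the two 10 GbE NICs using the NIC teaming built into WS2012.
# The team interface gets the same name as the team by default.
New-NetLbfoTeam -Name "ConvergedTeam" -TeamMembers $teamMembers `
    -TeamingMode SwitchIndependent -LoadBalancingAlgorithm HyperVPort `
    -Confirm:$false

# Bind an external Hyper-V Extensible Switch to the team interface.
# -AllowManagementOS $false: no default host vNIC; we add our own later.
# -MinimumBandwidthMode Weight: enables weight-based QoS for vNICs later;
# this mode can only be chosen when the switch is created.
New-VMSwitch -Name "External1" -NetAdapterName "ConvergedTeam" `
    -AllowManagementOS $false -MinimumBandwidthMode Weight
```

The VMs in the diagram then connect to External1 like any other virtual switch.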

In Windows Server 2008, a host with this storage would require the following NICs as a minimum:

- Parent (Management)

- VM (for the Virtual Network, the predecessor of the Virtual Switch)

- Cluster Communications/CSV

- Live Migration

That's 4 NICs per host … and that's without NIC teaming. Add teaming and you double the NICs!

The number of NICs goes up. The number of switch ports goes up. The cost of the rack space they consume goes up. The power bill for all that goes up. The support cost for your network goes up. In truth, the complexity goes up.

All that accumulation of NICs wasn't a matter of bandwidth. What we really care about in clustering is quality of service: bandwidth when we need it, and low latency. Converged fabrics assume we can guarantee those things. If we have those SLA features available to us (more in later posts), then two 10 GbE physical NICs in each clustered host might be enough, depending on the business and technology requirements of the site.

NICs aren’t important. Quality communications channels are important.

In this WS2012 converged fabrics design, we can create virtual NICs (vNICs) that attach to the Virtual Switch. That's done with the Add-VMNetworkAdapter PowerShell cmdlet, for example:

`Add-VMNetworkAdapter -ManagementOS -Name "Manage" -SwitchName External1`

… where Manage is the name of the new vNIC and External1 is the name of the Virtual Switch. The -ManagementOS flag tells the cmdlet that the new vNIC is for the parent partition (the host OS) rather than for a VM.
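Extending that one-liner to the three host NICs in the diagram could look like the sketch below. The vNIC names "Cluster" and "LiveMigration" are hypothetical labels chosen for illustration, and the script assumes External1 already exists on the host.

```powershell
# Assumes an external virtual switch named External1 already exists.
# "Cluster" and "LiveMigration" are illustrative names.
$hostVnics = "Manage", "Cluster", "LiveMigration"

foreach ($name in $hostVnics) {
    # -ManagementOS creates the vNIC in the parent partition, not in a VM.
    Add-VMNetworkAdapter -ManagementOS -Name $name -SwitchName "External1"
}

# List the host vNICs. In the host OS they appear as "vEthernet (<name>)".
Get-VMNetworkAdapter -ManagementOS | Select-Object Name, SwitchName, MacAddress
```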

You can then:

- Configure the vNIC using `Set-VMNetworkAdapter`

- Specify the VLAN for the vNIC using `Set-VMNetworkAdapterVlan`

- Configure IPv4/IPv6 on the vNIC in the host OS, just as you would on a physical NIC
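The VLAN and IP steps might be scripted roughly as follows. This is a sketch under stated assumptions: the VLAN IDs, the 192.168.x.x addresses and the /24 prefix are all hypothetical placeholders, and it assumes the three host vNICs named here already exist.

```powershell
# Hypothetical VLAN IDs and addresses; substitute your own plan.
$plan = @(
    @{ Name = "Manage";        VlanId = 101; IP = "192.168.101.11" },
    @{ Name = "Cluster";       VlanId = 102; IP = "192.168.102.11" },
    @{ Name = "LiveMigration"; VlanId = 103; IP = "192.168.103.11" }
)

foreach ($nic in $plan) {
    # Put the host vNIC in access mode on its VLAN. The physical switch
    # ports behind the team must trunk (tag) these VLANs.
    Set-VMNetworkAdapterVlan -ManagementOS -VMNetworkAdapterName $nic.Name `
        -Access -VlanId $nic.VlanId

    # The host sees each vNIC as "vEthernet (<name>)"; assign a static IPv4 address.
    New-NetIPAddress -InterfaceAlias "vEthernet ($($nic.Name))" `
        -IPAddress $nic.IP -PrefixLength 24
}
```

A default gateway (New-NetIPAddress -DefaultGateway) would typically be set only on the management vNIC.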

I think binding each of these vNICs to its own VLAN, with the physical switch ports configured as trunks (or whatever your switch vendor calls it), would be the right way to go. That will further isolate the traffic on the physical network. Please bear in mind that we're still in the beta days and I haven't had a chance to try this architecture yet.

Armed with this knowledge and these cmdlets, we can now create all the NICs we need that connect to our converged physical fabrics. Next, we need to look at securing these communications and guaranteeing their quality.

Hi,

We are in the process of implementing just such a design, but I have some questions I can’t seem to find a clear answer on:

With regard to the Cluster/Storage Network, what communication/data will be on this network, and will the amount of comms/data on this network be high?

Thanks in advance.

Cluster network: heartbeat (low bandwidth) and redirected I/O for metadata operations.

Sir, I have created a virtual adapter and a virtual switch in the parent (host) partition. Can you tell me how I would go about removing these? The only PowerShell commands I can find for removing virtual adapters and virtual switches are for ones in a VM. How do I remove them from the host partition using PowerShell?

Thank you in advance.

Use the -ManagementOS flag with the Remove- cmdlets.
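As a sketch of that answer, reusing the Manage and External1 names from the post: host vNICs are removed with Remove-VMNetworkAdapter plus -ManagementOS, while the switch itself is removed with Remove-VMSwitch, which has no -ManagementOS flag because switches always belong to the host.

```powershell
# Remove a single host (parent partition) vNIC by name.
Remove-VMNetworkAdapter -ManagementOS -Name "Manage"

# Or remove every remaining host vNIC attached to External1.
Get-VMNetworkAdapter -ManagementOS -SwitchName "External1" |
    Remove-VMNetworkAdapter

# Finally remove the virtual switch itself (-Force skips the confirmation prompt).
Remove-VMSwitch -Name "External1" -Force
```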

Great walkthrough! Do you know if any progress has been made on the ~3 Gb/s speed limitation for virtual network adapters on a 10 Gb/s converged Virtual Switch? Reference: http://blogs.technet.com/b/kevinholman/archive/2013/07/01/hyper-v-live-migration-and-the-upgrade-to-10-gigabit-ethernet.aspx. Thanks!