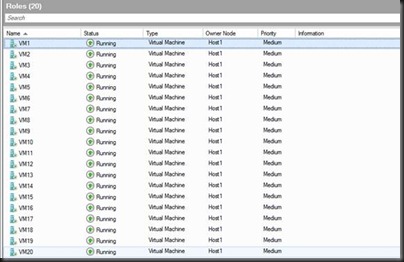

I have the lab at work set up. The clustered hosts are actually quite modest, with just 16 GB RAM at the moment. That’s because my standalone System Center host has more grunt. This WS2012 Beta Hyper-V cluster is purely for testing/demo/training.

I was curious to see how fast Live Migration would be. In other words, how long would it take me to vacate a host of its VM workload so I could perform maintenance on it? I used my PowerShell script to create a bunch of VMs with 512 MB RAM each.

Once I had that done, I reconfigured the cluster with various speeds and configurations for the Live Migration network:

- 1 * 1 GbE

- 1 * 10 GbE

- 2 * 10 GbE NIC team

- 4 * 10 GbE NIC team

For each of these configurations, I would time and capture network utilisation data for migrating:

- 1 VM

- 10 VMs

- 20 VMs

I had configured the 2 hosts to allow 20 simultaneous live migrations across the Live Migration network. This would allow me to see what sort of impact congestion would have on scale out.

Remember, there is effectively zero downtime in Live Migration. The time I'm concerned with covers the memory synchronisation over the network and the switchover of the VMs from one host to another.

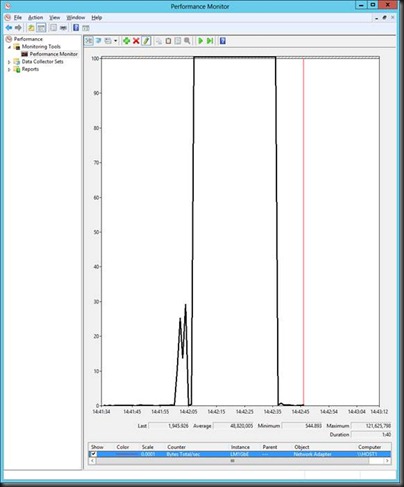

1 * 1 GbE

- 1 VM
  - 7 seconds
  - Maximum transfer: 119,509,089 bytes/sec
- 10 VMs
  - 40 seconds
  - Maximum transfer: 121,625,798 bytes/sec
- 20 VMs
  - 80 seconds
  - Maximum transfer: 122,842,926 bytes/sec

Note: the peak barely moves across the three tests (from 119.5 to 122.8 million bytes/sec). The link is saturated from the first test onwards, so migrating more VMs simply takes longer. 1 GbE doesn't scale.
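To see how close those 1 GbE peaks are to the wire, the bytes/sec readings can be converted to gigabits per second. This is a minimal sketch: it assumes the figures above are plain bytes per second and uses decimal units (1 Gbps = 10^9 bits/s); `bytes_per_sec_to_gbps` is a hypothetical helper name, not part of the original tests.

```python
def bytes_per_sec_to_gbps(bytes_per_sec: int) -> float:
    """Convert a bytes/sec reading to gigabits per second (1 Gbps = 10**9 bits/s)."""
    return bytes_per_sec * 8 / 1e9


# Peak transfer rates recorded in the 1 GbE tests above, keyed by VM count.
one_gbe_peaks = {1: 119_509_089, 10: 121_625_798, 20: 122_842_926}

for vms, peak in one_gbe_peaks.items():
    gbps = bytes_per_sec_to_gbps(peak)
    # The nominal line rate is 1 Gbps, so gbps is also the fraction of it in use.
    print(f"{vms:>2} VMs: {gbps:.3f} Gbps ({gbps:.0%} of nominal 1 Gbps)")
```

All three readings land between 95% and 99% of the nominal 1 Gbps line rate, which is what "fully utilised from test 1 onwards" looks like in numbers.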

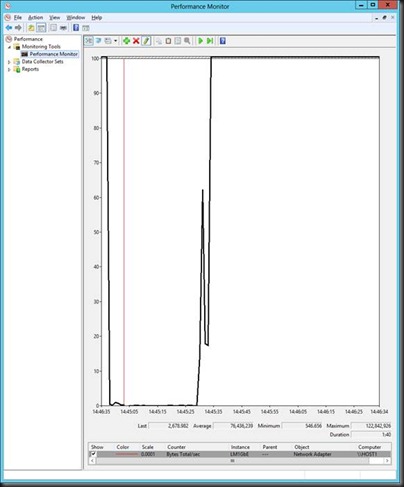

1 * 10 GbE

- 1 VM
  - 5 seconds
  - Maximum transfer: 338,530,495 bytes/sec
- 10 VMs
  - 13 seconds
  - Maximum transfer: 1,761,871,871 bytes/sec
- 20 VMs
  - 21 seconds
  - Maximum transfer: 1,302,843,196 bytes/sec

Note: see how much more data we can push through at once? The host was emptied in roughly a quarter of the time: 21 seconds for 20 VMs, against 80 seconds on 1 GbE.

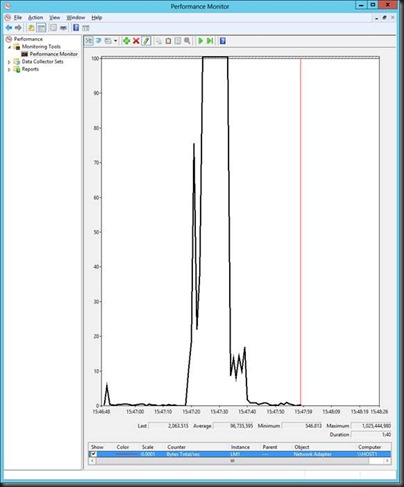

2 * 10 GbE

- 1 VM
  - 5 seconds
  - Maximum transfer: 338,338,532 bytes/sec
- 10 VMs
  - 14 seconds
  - Maximum transfer: 961,527,428 bytes/sec
- 20 VMs
  - 21 seconds
  - Maximum transfer: 1,032,138,805 bytes/sec

4 * 10 GbE

- 1 VM
  - 5 seconds
  - Maximum transfer: 284,852,698 bytes/sec
- 10 VMs
  - 12 seconds
  - Maximum transfer: 1,090,935,398 bytes/sec
- 20 VMs
  - 21 seconds
  - Maximum transfer: 1,025,444,980 bytes/sec

Comparison of Time Taken for Live Migration

What this says to me is that I hit my sweet spot when I deployed 10 GbE for the Live Migration network. Adding more bandwidth did nothing: 20 VMs took 21 seconds whether I used one, two or four 10 GbE NICs. My virtual workload (20 VMs * 512 MB, roughly 10 GB of RAM) was "too small". With more memory in the hosts I could get more interesting figures.
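The plateau is easy to see if the single-run timings are expressed as speedups over the 1 GbE baseline. This is a minimal sketch using only the times reported above; the `timings` table and the `speedup` helper are illustrative names, not something from the original test setup.

```python
# Single-run timings (seconds) from the tests above, keyed by Live Migration
# network configuration and then by the number of VMs migrated at once.
timings = {
    "1 * 1 GbE": {1: 7, 10: 40, 20: 80},
    "1 * 10 GbE": {1: 5, 10: 13, 20: 21},
    "2 * 10 GbE": {1: 5, 10: 14, 20: 21},
    "4 * 10 GbE": {1: 5, 10: 12, 20: 21},
}


def speedup(baseline: str, config: str, vms: int) -> float:
    """How many times faster `config` drained `vms` VMs than `baseline` did."""
    return timings[baseline][vms] / timings[config][vms]


for config in timings:
    # Compare every configuration against 1 GbE for the 20-VM test.
    print(f"{config:<11} 20 VMs: {speedup('1 * 1 GbE', config, 20):.2f}x")
```

For the 20-VM test, all three 10 GbE configurations come out at the same ratio (80/21, about 3.8x), so teaming extra NICs added nothing for a workload this size.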

While a single 10 GbE NIC is the sweet spot, I would still team two of them with Windows Server 2012 NIC teaming for fault tolerance. That gives 20 Gbps of aggregate bandwidth, with 10 Gbps still available if one NIC fails.

Comparison of Bandwidth Utilisation

I have no frickin' idea how to interpret this data. Maybe I need more tests: I only did one run of each test, and really I should have run each one 10 times and looked at the mean and standard deviation. But somehow, in all three tests (1, 10 and 20 VMs), peak throughput dropped once the 10 GbE NICs were teamed for 20 GbE or more. Very curious!
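Summarising repeated runs as described above could look like the following minimal sketch, which uses Python's standard `statistics` module. The `summarise_runs` helper is hypothetical, and no repeated-run data exists for these tests, so it is shown without sample figures.

```python
import statistics


def summarise_runs(durations_sec: list[float]) -> tuple[float, float]:
    """Return (mean, sample standard deviation) for repeated timings of one test."""
    if len(durations_sec) < 2:
        # A standard deviation needs at least two samples to be meaningful.
        raise ValueError("need at least two runs to estimate a standard deviation")
    return statistics.mean(durations_sec), statistics.stdev(durations_sec)
```

Feeding it, say, ten timings per configuration and VM count would show whether a gap such as 12 versus 14 seconds is larger than the run-to-run noise.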

Summary

The days of 1 GbE are numbered. Hosts are getting denser, and you should be deploying them with 10 GbE networking for their Live Migration networks. This data shows that even in my simple environment with 16 GB RAM hosts, I can empty a host for maintenance in seconds. With VMM Dynamic Optimization, I can move workloads in seconds. Imagine accidentally deploying 192 GB RAM hosts with 1 GbE Live Migration networks.
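A rough lower bound shows why that last scenario hurts. This sketch assumes (illustratively, not measured) that the full 192 GiB is VM memory to be moved, that the link sustains the best 1 GbE peak recorded above for the whole transfer, and that every page is copied exactly once. Real Live Migration also re-copies pages dirtied during the transfer, so actual times would be longer. `min_drain_seconds` is a hypothetical helper.

```python
GIB = 1024 ** 3  # bytes in one gibibyte


def min_drain_seconds(ram_bytes: int, bytes_per_sec: float) -> float:
    """Lower bound on drain time: RAM size divided by a sustained transfer rate.

    Ignores re-copying of dirtied pages and any switchover overhead.
    """
    return ram_bytes / bytes_per_sec


# Assumption: the 20-VM peak from the 1 GbE tests is sustained for the whole copy.
peak_1gbe = 122_842_926  # bytes/sec
secs = min_drain_seconds(192 * GIB, peak_1gbe)
print(f"192 GiB over 1 GbE: at least {secs:.0f} s (~{secs / 60:.0f} min)")
```

Even under these generous assumptions the copy alone takes close to half an hour, compared with seconds for the 16 GB hosts in this lab.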

Great test Aidan. Did you by chance also capture host CPU utilization during the LMs?

Unfortunately no. Looks like I’m getting more RAM so I’ll hopefully have another run in a few weeks.

Good stuff. I wonder if the teaming adds a bit of overhead, hurting the 20 GbE results slightly? Curious: which 10 GbE card(s) are you using?

Hi Wes,

Sorry for the very, very late response: NC552SFP NICs.