Live Migration was the big story in Windows Server 2008 R2 Hyper-V RTM, and in WS2012 Hyper-V it continues to be a big part of a much BIGGER story. Some of the headline stuff about Live Migration in Windows Server 2012 Hyper-V was announced at Build in September 2011. The big news was that Live Migration was separated from Failover Clustering. This adds flexibility and agility (2 of the big reasons beyond economics why businesses have virtualised) for those who don’t want to or cannot afford clusters:

- Small businesses or corporate branch offices where the cost of shared storage can be prohibitive

- Hosting companies where every penny spent on infrastructure must be passed on to customers in one way or another, and every time the hosting company spends more than the competition it becomes less competitive.

- Shared pools of VDI VMs don’t always need clustering. Some might find it acceptable if a bunch of pooled VMs go offline if a host crashes, and the user is redirected to another host by the broker.

Don’t get me wrong; clustered Hyper-V hosts are still the British Airways First Class way to travel. It’s just that the cost is not always justified, even though the SMB 3.0 and Scale Out File Server story brings those costs way down in many scenarios where the hardware functions of SAS/iSCSI/FC SANs aren’t required.

Live Migration has grown up. In fact, it’s grown up big time. There are lots of pieces and lots of terminology. We’ll explore some of this stuff now. This tiny sample of the improvements in Windows Server 2012 Hyper-V shows how much work the Hyper-V group have done in the last few years. And as I’ll show you next, they are not taking any chances.

Live Migration With No Compromises

Two themes have stood out to me since the Build announcements. The first theme is “there will be no new features that prevent Live Migration”. In other words, any developer who has some cool new feature for Microsoft’s virtualisation product must design/write it in such a way that it allows for uninterrupted Live Migration. You’ll see the evidence of this as you read more about the new features of Windows Server 2012 Hyper-V. Some of the methods they’ve implemented are quite clever.

The second and most important theme is “always have a way back”. Sometimes you want to move a VM from one host to another. There are dependencies such as networking, storage, and destination host availability. The source host has no control over these. If one dependency fails, then the VM cannot be lost, leaving the end users to suffer. For that reason, new features always try to have a fallback plan where the VM can be left running on the source host if the migration fails.

With those two themes in mind, we’ll move on.

Tests Are Not All They Are Cracked Up To Be

The first time I implemented VMware 3.0 was on an HP blade farm with an EVA 8000. Just like any newbie to this technology (and to be honest I still do this with Hyper-V because it’s a reassuring test of the networking configurations that I have done) I created a VM and did a live migration (vMotion) of it from one host to another while doing a Ping test. I was saddened to see 1 missed ping during the migration.

What exactly did I test? Ping is an ICMP tool that is designed to have very little tolerance of faults. Of course there is little to no tolerance; it’s a network diagnostic tool that is used to find faults and packet loss. Just about every application protocol we use (SMB, HTTP, RPC, and so on) is TCP based. TCP (or Transmission Control Protocol) is designed to handle small glitches. So where Ping detects a problem, something like a file copy or streaming media might have a bump in the road that we humans probably won’t perceive. And even applications that use UDP, such as the new RemoteFX in Windows Server 2012, are built to be tolerant of a dropped packet if it should happen (they choose UDP instead of TCP because of this).

Long story short: Ping is a great test, but bear in mind that you have a strong chance of seeing just 1 packet with slightly increased latency or even a single missed ping. The eyeball test with a file copy, an RDP session, or a streaming media session is the real end user test.
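To put a number on what a missed ping actually tells you, here is a minimal Python sketch. It is not a Hyper-V or ping tool; the `Probe` record and the `longest_outage` helper are names I made up for illustration, and it assumes you have already logged one probe result per interval. It reports the longest gap between two successful replies, which is only an upper bound on the real interruption.

```python
from dataclasses import dataclass


@dataclass
class Probe:
    timestamp: float  # seconds since the test started (hypothetical log format)
    ok: bool          # True if the probe got a reply


def longest_outage(probes):
    """Return the longest gap, in seconds, between two successful probes
    that have at least one failed probe between them.

    This is an upper bound: the real interruption may be much shorter
    than the probe interval can reveal.
    """
    longest = 0.0
    last_ok = None
    in_outage = False
    for probe in sorted(probes, key=lambda p: p.timestamp):
        if probe.ok:
            if in_outage and last_ok is not None:
                longest = max(longest, probe.timestamp - last_ok)
            last_ok = probe.timestamp
            in_outage = False
        else:
            in_outage = True
    return longest


# One missed ping in a one-per-second test.
probes = [Probe(0.0, True), Probe(1.0, True), Probe(2.0, True),
          Probe(3.0, False), Probe(4.0, True)]
print(longest_outage(probes))  # prints 2.0
```

So a single missed ping at a 1 second interval only tells you that the interruption was somewhere under 2 seconds. That is the kind of glitch that TCP retransmission is built to ride through.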

Live Migration – The Catchall

The term Live Migration is used as a bit of a catchall in Windows Server 2012 Hyper-V. To move a VM from one location to another, you’ll start off with a single wizard and then have choices.

Live Migration – Move A Running VM

In Windows Server 2008 R2, we had Live Migration built into Failover Clustering. A VM had two components: its storage (VHDs usually) and its state (processes and memory). Failover Clustering would move responsibility for both the storage and state from one host to another. That still applies in a Windows Server 2012 Hyper-V cluster, and we can still do that. But now, at its very core, Live Migration is the movement of the state of a VM … but we can also move the storage as you’ll see later.

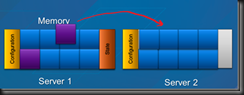

AFAIK, how the state moves hasn’t really changed because it works very well. A VM’s state is its configuration (think of it as the specification, such as processor and memory), its memory contents, and its state (what’s happening now).

The first step is to copy the configuration from the source host to the destination host. Effectively you now have a blank VM sitting on the destination host, waiting for memory and state.

Now the memory of the VM is copied, one page at a time, from the source host while the VM is running. Naturally, things are happening on the running VM and its memory is changing. Any previously copied pages that subsequently change are marked as dirty so that they can be copied over again. Once copied, they are marked as clean.

Eventually you get to a point where either everything is copied or there is almost nothing left (I’m simplifying for brevity – brevity! – me! – hah!). At this point, the VM is paused on the source host. Start the stopwatch because now we have “downtime”. The state, which is tiny, is copied from the source host to the VM on the destination host. The VM on the destination is now complete. It is placed “back” into a running state, and the VM on the source host is removed. Stop the stopwatch. Even in a crude lab, the most I miss is one ping here, and as I stated earlier, that’s not enough to impact applications.

And that’s how Live Migration of a running VM works without getting too bogged down in the details.
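If you like to see this sort of thing as code, here is a toy Python model of the copy/dirty/recopy loop described above. It is only a sketch and not how Hyper-V is actually written: the function name, the page counts, the dirty rate, the stop threshold, and the cap on the number of rounds are all assumptions I picked so the numbers are easy to follow.

```python
import random


def simulate_precopy(total_pages=10_000, dirty_per_round=0.05,
                     stop_threshold=50, max_rounds=10, seed=1):
    """Toy model of iterative memory copy for a running VM.

    Returns (rounds, pages_copied_while_running, pages_copied_while_paused).
    All parameters are illustrative assumptions, not Hyper-V settings.
    """
    rng = random.Random(seed)
    dirty = set(range(total_pages))  # at the start, every page still needs copying
    copied_live = 0
    rounds = 0

    # Keep copying while the VM runs, until almost nothing is left
    # (or we give up after max_rounds because the VM dirties memory too fast).
    while len(dirty) > stop_threshold and rounds < max_rounds:
        rounds += 1
        to_copy = len(dirty)
        copied_live += to_copy  # these pages are now clean on the destination

        # While those pages were in flight, the VM kept writing to memory.
        # Assume the number of pages it touches scales with the copy time.
        touched = int(to_copy * dirty_per_round)
        dirty = set(rng.sample(range(total_pages), touched))

    # The VM is paused here (start the stopwatch): only the last few dirty
    # pages and the tiny running state still have to cross the wire.
    copied_paused = len(dirty)
    return rounds, copied_live, copied_paused


print(simulate_precopy())  # prints (2, 10500, 25)
```

With these made-up numbers the VM is only paused while 25 pages move, after two rounds of copying that happened while it was running. Set `dirty_per_round=1.0` and the loop never converges, which is why the sketch has a cap on rounds.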

Live Migration on a Cluster

The process for Live Migration of a VM on a cluster is simple enough:

- The above process happens to get a VM’s state from the source host to the destination host.

- As a part of the switch over, responsibility for the VM’s files on the shared storage is passed from the source host to the destination host.

This combined solution is what kept everything pretty simple from our in-front-of-the-console perspective. Things get more complicated with Windows Server 2012 Hyper-V because Live Migration is now possible without a cluster.

SMB Live Migration

Thanks to SMB 3.0 with its multichannel support, and added support for high-end hardware features such as RDMA, we can consider placing a VM’s files on a file share.

The VMs continue to run on Hyper-V hosts, but when you inspect the VMs you’ll find their storage paths are on a UNC path such as \\FileServer1\VMs or \\FileServerCluster1\VMs. The concept here is that you can use a more economic solution to store your VMs on shared storage, with full support for things like Live Migration and VSS backup. I know you’re already questioning this, but by using multiple 1 Gbps or even 10 Gbps NICs with multichannel (SMB 3.0 simultaneously routing file share traffic over multiple NICs without NIC teaming) you can get some serious throughput.

There are a bunch of different architectures which will make for some great posts at a later point. The Hyper-V hosts (in the bottom of the picture) can be clustered or not clustered.

Back to Live Migration, and this scenario isn’t actually that different to the Failover Cluster model. The storage is shared, with both the source and destination hosts having file share and folder permissions to the VM storage. Live Migration happens, and responsibility for files is swapped. Job done!

Shared Nothing Live Migration

This is one scenario that I love. I wish I’d had it when I was hosting with Hyper-V in the past. It gives you mobility of VMs across many non-clustered hosts without storage boundaries.

In this situation we have two hosts that are not clustered. There is no shared storage. VMs are stored on internal disk. For example, VM1 could be on the D: drive of HostA, and we want to move it to HostB.

A few things make this move possible:

- Live Migration: we can move the running state of the VM from HostA to HostB using what I’ve already discussed above.

- Live Storage Migration: Ah – that’s new! We had Quick Storage Migration in VMM 2008 R2 where we could relocate a VM with a few minutes of downtime. Now we get something new in Hyper-V with zero downtime. Live Storage Migration enables us to relocate the files of a VM. There are two options: move all the files to a single location, or relocate the individual files to different locations (useful if moving to a more complex storage architecture such as Fast Track).

The process of Live Storage Migration is pretty sweet. It’s really the first time MSFT has implemented it, and the funny thing is that they created it while, at the same time, VMware was having their second attempt (to get it right) at vSphere 5.0 Storage vMotion.

Say you want to move a VM’s storage from location A to location B. The first step is to copy the files.

IO operations to the source VHD are obviously continuing because the VM is still running. We cannot just flip the VM over after the copy, and lose recent actions on the source VHD. For this reason, the VHD stack simultaneously writes to both the source and destination VHDs as the copy process is taking place.

Once the VHD is successfully copied, the VM can switch IO so it only targets the new storage location. The old storage location is finished with, and the source files are removed. Note that they are only removed after Hyper-V knows that they are no longer required. In other words, there is a fall back in case something goes wrong with the Live Storage Migration.
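Here is a small Python sketch of that copy-while-mirroring idea. Treat it as a toy model under my own assumptions: a "VHD" is just a list of blocks, `live_storage_copy` is a made-up name, and the `writes_during_copy` schedule stands in for whatever the running VM happens to write while the copy is in progress. It is not the real VHD stack.

```python
def live_storage_copy(source, writes_during_copy):
    """Copy `source` (a list of blocks) to a new list while writes keep arriving.

    `writes_during_copy` maps a copy step (the index of the block about to be
    copied) to a list of (block_index, new_value) writes that the VM issues
    at that moment. This schedule is a hypothetical stand-in for real VM IO.
    """
    destination = [None] * len(source)
    for step in range(len(source)):
        # Writes from the running VM go to BOTH copies, so nothing written
        # during the copy can be lost when we switch over.
        for block, value in writes_during_copy.get(step, []):
            source[block] = value
            destination[block] = value
        # Background copy of the next block.
        destination[step] = source[step]
    return destination


source_vhd = list("abcdef")
writes = {
    2: [(0, "X"), (5, "Y")],  # one block already copied, one not copied yet
    4: [(4, "Z")],            # the block that is being copied right now
}
destination_vhd = live_storage_copy(source_vhd, writes)
print(destination_vhd == source_vhd)  # prints True
```

Both copies are identical at the end, so switching IO to the destination is safe. Notice that the source is never touched destructively during the copy, which is what leaves the way back open if something fails.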

Note that both hosts must be able to authenticate via Kerberos, i.e. domain membership.

Bear this in mind: Live Storage Migration is copying and synchronising a bunch of files, and at least one of them (VHD or VHDX) is going to be quite big. There is no way to escape this fact; there will be disk churn during storage migration. It’s for that reason that I wouldn’t consider doing Storage Migration (and hence Shared Nothing Live Migration) every 5 minutes. It’s a process that I can use in migration scenarios such as storage upgrade, obsoleting a standalone host, or planned extended standalone host downtime.

Back to the scenario of Live Migration without shared storage. We now have the 2 key components and all that remains is to combine and order them:

- Live Storage Migration is used to replicate and mirror storage between HostA and HostB. This mirror is kept in place until the entire Shared Nothing Live Migration is completed.

- Live Migration copies the VM state from HostA to HostB. If anything goes wrong, the storage of the VM is still on HostA and Hyper-V can fall back without losing anything.

- Once the Live Migration is completed, the storage mirror can be broken, and the VM is removed from the source machine, HostA.
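The ordering of those three steps, and the way back if the middle one fails, can be sketched in a few lines of Python. This is purely illustrative: `shared_nothing_migrate` and the `move_state` callable are hypothetical names, nothing here talks to Hyper-V, and cleaning up the partial copy on the destination after a failure is my assumption about what a tidy fallback would do.

```python
def shared_nothing_migrate(vm, source, destination, move_state):
    """Illustrative ordering of a Shared Nothing Live Migration.

    `move_state` is a hypothetical callable that performs the state move
    and returns True on success. Returns (owning_host, log_of_steps).
    """
    log = []

    # Step 1: mirror the storage. The source files stay in place.
    log.append(f"mirror storage of {vm}: {source} -> {destination}")

    # Step 2: move the running state while the mirror is still in place.
    if not move_state():
        # The way back: the VM and its storage never left the source host.
        log.append(f"state migration failed, discard the copy on {destination}")
        log.append(f"{vm} keeps running on {source}")
        return source, log
    log.append(f"state of {vm} moved: {source} -> {destination}")

    # Step 3: only now break the mirror and clean up the source.
    log.append(f"break mirror, remove {vm} from {source}")
    return destination, log


owner, steps = shared_nothing_migrate("VM1", "HostA", "HostB", lambda: True)
print(owner)  # prints HostB

owner, steps = shared_nothing_migrate("VM1", "HostA", "HostB", lambda: False)
print(owner)  # prints HostA
```

The point of the ordering is that nothing is removed from HostA until the very last step, so a failure at any earlier point leaves VM1 running exactly where it started.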

Summary

There is a lot of stuff in this post. Here are a few things to retain:

- Live Migration is a bigger term than it was before. You can do so much more with VM mobility.

- Flexibility & agility are huge. I’ve always hated VMware Raw Device Mapping and Hyper-V Passthrough disks. The much bigger VHDX is the way forward (score for Hyper-V!) because it offers scale and unlimited mobility.

- It might read like I’ve talked about a lot of technologies that make migration complex. Most of this stuff is under the covers and is revealed through a simple wizard. You simply want to move/migrate a VM, and then you have choices based on your environment.

- You will want to upgrade to Windows Server 2012 Hyper-V.

Hi Aidan,

The new LM features seem pretty promising! I just have a question – in your opinion, will SMB Live Migration be an alternative to a Hyper-V cluster on top of iSCSI (with performance in mind)?

Sort of. It would be OK for -planned- maintenance windows. But it would not be a good -unplanned- solution. To be honest, if a customer is deploying 4 or more VMs, then to keep legal they should be using Windows Server Enterprise which gives clustering. Alternatively they should use the free Hyper-V Server which includes clustering.

If iSCSI storage costs are the issue, then consider building Hyper-V clusters using SMB 2.2 and multichannel. It looks like this, with a pair of 1 Gbps NICs, should perform quite well. Then you have all the bells and whistles of a cluster.

Thanks! It’s not the iSCSI costs that bother me – actually, I’d be using the built-in initiator and WSS target, and the hardware is the same. What I am thinking about is provisioning – copying between an iSCSI LUN and an SMB share will pass through the network (even though both stay on the same server), while copying between SMB shares (again, on the same “storage” server) will pass through the storage subsystem. I’ve found a test results article that says that SMB 2.0 is almost identical to iSCSI (regarding performance) – you can find it here – https://communities.netapp.com/community/netapp-blogs/msenviro/blog/2011/09/29/looking-ahead-hyper-v-over-smb-vs-iscsi. Anyway, I just wanted to know if you heard/saw something regarding any difference in performance between those two options. If there is no significant difference, I think I will go the SMB 2.2 way.

OK, looks like you mixed up terminology; SMB Live Migration is a VM movement method, not a storage method.

SMB 2.2 file shares with multichannel should match/exceed the performance of iSCSI but I’ve yet to see real numbers on that. It certainly would be cheaper, and I’ve heard that with 10 Gbps the disk can become the bottleneck 🙂

Nah, I understand the difference between SMB and LM 🙂 Just referred to the section in your post with the “LM over SMB” 😉

Anyway, thanks for the info in your last comment – that’s what I was looking for. And I am definitely setting up my 9 node cluster (for test/dev purposes) with WS8 and SMB2.2 storage 🙂 I just can’t wait for the HW to come…

Thanks again!

“Note that both hosts must be able to authenticate via Kerberos, i.e. domain membership.” Is that true? In the Live Migration settings, there’s mention of being able to use CredSSP or Kerberos. I’m trying to test with 2 workgroup “servers”. It’s not working for me, but I could have other problems.

Yes, it’s true. Kerberos/CredSSP changes where you can manage Live Migration and the need to do CIFS delegation.

After setting up a test environment with 2 Hyper-V servers in a cluster and one SMB storage server, I’m getting about 10 lost ping packets when live migrating.

Anyone have any ideas?

Hi Aidan, in this post you mention the possibility of losing a ping during Live Migration. I agree that ping is not a good test and that it would be better to test Live Migration with an application such as RDP. But my customer has some legacy applications that can have big problems if one or two ping packets are lost during Live Migration. The same test with vSphere (5.5) is very different, because I do not lose any ping packets during vMotion. Is this a limit of Hyper-V (version 2012) in this type of VM motion? Honestly, it’s difficult to find this information on TechNet, which is why I’m asking you. Many thanks in advance for your reply.

Actually, I’ve never seen a vMotion happen without losing a ping – they’re both pretty much doing the same thing to move VMs. It’s going to be hardware and load on that hardware that causes the “issue”.

Hi Aidan, I have a huge problem with a client due to a problem with a LUN from a NAS device that was working with Hyper-V on Windows Server 2012 R2.

We moved a VM using this feature in Hyper-V Manager, but the LUN crashed while the Hyper-V host was performing the move.

We tried to remap the LUN to the server but it is empty.

We lost all the VMs that were running on this LUN at that moment.

Is there a way to recover this?

If the LM was not finished then the VM should be fine in the original location. Otherwise, curse the storage vendor and go to backup.