I’ve just been woken up from my first decent sleep (jetlag) by my first ever earthquake (3.5) and I got to thinking … yesterday (Hyper-V/Private Cloud day) was incredible. Normally when I live blog I can find time to record what’s “in between the lines” and some of the spoken word of the presenter. Yesterday, I struggled to take down the bullet points from the slides; there was just so much change being introduced. There wasn’t any great detail on any topic, simply because there just wasn’t time. One of the cloud sessions ran over the allotted time and they had to skip slides.

I think some things are easy to visualise and comprehend because they are “tangible”. Hyper-V Replica is a killer headline feature. The increased host/cluster scalability gives us some “Top Gear” stats: just how many people really have a need for a 1,000 BHP car? And not many of us really need 63 host clusters with 4,000 VMs. But I guess Microsoft had an opportunity to test and push the headline ahead of the competition, and rightly took it.

Speaking of Top Gear metrics, one interesting thing was that the vCPU:pCPU ratio limit of 8:1 was eliminated with barely a mention. Hyper-V now supports as many vCPUs as you can fit on a host without compromising VM and service performance. That is excellent. I once had a fairly low-end host with a single 4-core CPU that was full (memory, before Dynamic Memory) but CPU only averaged 25%. I could have reliably squeezed on way more VMs, easily exceeding the ratio. The elimination of this limit by Hyper-V will further reduce the cost of virtualisation. Note that you still need to respect the vCPU:pCPU ratio support statements of applications that you virtualise, e.g. Exchange and SharePoint, because an application needs what it needs. Assessment, sizing, and monitoring are critical for squeezing in as much as possible without compromising on performance.
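To put a number on that, here is a minimal sketch of the ratio arithmetic. The VM count is made up for illustration (it is not a measurement from my old host), and `vcpu_ratio` is just a helper name I picked.

```python
def vcpu_ratio(vcpus_per_vm, physical_cores):
    """Return total assigned vCPUs divided by the host's physical cores."""
    if physical_cores <= 0:
        raise ValueError("physical_cores must be positive")
    return sum(vcpus_per_vm) / physical_cores


# Hypothetical host: one 4-core CPU running 40 single-vCPU VMs.
vms = [1] * 40
ratio = vcpu_ratio(vms, physical_cores=4)

print(f"vCPU:pCPU ratio = {ratio:.0f}:1")        # vCPU:pCPU ratio = 10:1
print("Exceeds the old 8:1 limit:", ratio > 8)   # True
```

The ratio only tells you how oversubscribed the host is on paper. Whether it is acceptable still depends on measured CPU load and on the support statements of the applications inside the VMs.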

The lack of native NIC Teaming was something that caused many concerns. Those who needed it used 3rd party applications. That caused stability issues, new security issues (check using HP NCU and VLANing for VM isolation), and I also know that some Microsoft partners saw it as enough of an issue to not recommend Hyper-V. The cries for native NIC teaming started years ago. Next year, you’ll get it in Windows Server 8.

One of the most interesting sets of features is how network virtualisation has changed. I don’t have the time or equipment here in Anaheim to look at the Server OS yet, so I don’t have the techie details. But this is my understanding of how we can do network isolation.

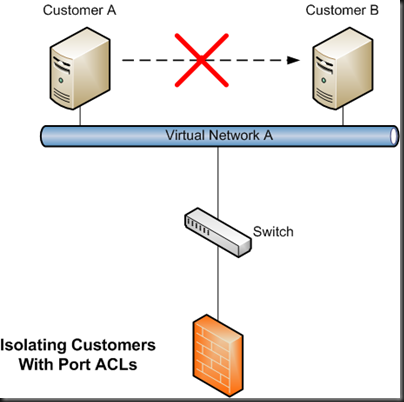

Firstly, we are getting Port ACLs (access control lists). Right now, we have to deploy at least 1 VLAN per customer or application to isolate them. N-tier applications require multiple VLANs. My personal experience was that I could deploy customer VMs reliably in very little time. But I had to wait quite a while for one or more VLANs to be engineered and tested. It stressed me (customer pressure) and it stressed the network engineers (complexity). Network troubleshooting (Windows Server 8 is bringing in virtual network packet tracing!) was a nightmare, and let’s not imagine replacing firewalls or switches.

Port ACLs will allow us to say what a VM can or cannot talk to. Imagine being able to build a flat VLAN with hundreds or thousands of IP addresses. You don’t have to subnet it for different applications or customers. Instead, you could (in theory) place all the VMs in that one VLAN and use Port ACLs to dictate what they can talk to. I haven’t seen a demo of it, and I haven’t tried it, so I can’t say more than that. You’ll still need an edge firewall, but it appears that Port ACLs will isolate VMs behind the firewall.

Port ACLs have the potential to greatly simplify physical network design with fewer VLANs. Equipment replacement will be easier. Troubleshooting will be easier. And now we have greatly reduced the involvement of the network admins; their role will be to customise edge firewall rules.
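Since I haven't seen the real thing, the following is only a conceptual sketch of how per-port allow/deny rules could isolate VMs on one flat VLAN. Everything in it is an assumption: the `PortAcl` class, the first-match-wins evaluation, the default-deny fallback, and the addresses are all invented for illustration and are not the Hyper-V implementation or its management interface.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network


@dataclass(frozen=True)
class PortAcl:
    """One rule on a VM's virtual switch port (hypothetical model)."""
    remote: str   # remote address or CIDR range the rule matches
    action: str   # "allow" or "deny"


def is_allowed(rules, remote_ip, default="deny"):
    """Return True if traffic to/from remote_ip is permitted.

    Assumption: the first matching rule wins, otherwise the default applies.
    """
    addr = ip_address(remote_ip)
    for rule in rules:
        if addr in ip_network(rule.remote):
            return rule.action == "allow"
    return default == "allow"


# Customer A's web VM sits on a flat 10.0.0.0/16 VLAN shared with others.
web_vm_rules = [
    PortAcl("10.0.1.20/32", "allow"),  # customer A's database VM
    PortAcl("10.0.0.1/32", "allow"),   # edge firewall / gateway
    PortAcl("10.0.0.0/16", "deny"),    # everything else on the flat VLAN
]

print(is_allowed(web_vm_rules, "10.0.1.20"))  # True: own database tier
print(is_allowed(web_vm_rules, "10.0.2.30"))  # False: another customer's VM
```

The point of the sketch is that the isolation lives in rules attached to the VM's port rather than in the physical VLAN layout, which is why the network team would not need to engineer a new VLAN per customer.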

Secondly, we have the incredibly hard to visualise network or IP virtualisation. The concept is that a VM or VMs are running on network A, and you want to be able to move them to a different network B, but you want to do it without changing the IP address and without downtime. The scenarios include:

- A company’s network is being redesigned as a new network with new equipment.

- One company is merging with another, and they want to consolidate the virtualisation infrastructures.

- A customer is migrating a virtual machine to a hoster’s network.

- A private cloud or public cloud administrator wants to be able to move virtual machines around various different networks (power consolidation, equipment replacement, etc) without causing downtime.

Any of these would normally involve an IP address change. You can see above that the VMs (10.1.1.101 and 10.1.1.102) are on Network A with IPs in the 10.1.1.0/24 network. That network has its own switches and routers. The admins want to move the 10.1.1.101 VM to the 10.2.1.0/24 network, which has different switches and routers.

Internet DNS records, applications with hard-coded IP addresses (they shouldn’t have them, but they do), and other integrated services all depend on that static IP address. Changing that on one VM would cause mayhem with accusatory questions from the customer/users/managers/developers that make you out to be either a moron or a saboteur. Oh yeah; it would also cause business operations downtime. Changing an IP address like that is a problem. In this scenario, 10.1.1.102 would lose contact with 10.1.1.101 and the service they host would break.

Today, you make the move and you have a lot of heartache and engineering to do. Next year …

Network virtualisation abstracts the virtual network from the physical network. IP address virtualisation does something similar for the address. The VM that was moved still believes it is on 10.1.1.101. 10.1.1.102 can still communicate with the other VM. However, the moved VM is actually on the 10.2.1.0/24 network as 10.2.1.101. The IP address is virtualised. Mission accomplished. In theory, there’s nothing to stop you from moving the VM to 10.3.1.0/24 or 10.4.1.0/24 with the same successful results.
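One way I picture this is as a lookup table between the address the VM believes it has and the address it really occupies on the physical network. The sketch below is purely a mental model under that assumption: `AddressVirtualiser` and its methods are hypothetical names, and I have no details yet on how Windows Server 8 actually does the mapping.

```python
class AddressVirtualiser:
    """Maps the IP a VM believes it has to where it physically lives."""

    def __init__(self):
        self._location = {}  # virtual IP -> physical IP

    def place(self, virtual_ip, physical_ip):
        """Record (or update) the physical address behind a virtual IP."""
        self._location[virtual_ip] = physical_ip

    def resolve(self, virtual_ip):
        """Return the physical address traffic should be delivered to."""
        return self._location[virtual_ip]


net = AddressVirtualiser()

# Both VMs start on Network A (10.1.1.0/24), as in the diagram.
net.place("10.1.1.101", "10.1.1.101")
net.place("10.1.1.102", "10.1.1.102")

# Move the first VM to Network B; only the mapping changes.
net.place("10.1.1.101", "10.2.1.101")

# 10.1.1.102 still talks to "10.1.1.101"; delivery goes to the new location.
print(net.resolve("10.1.1.101"))  # 10.2.1.101
```

Nothing inside either VM changes in this model, which is why DNS records and hard-coded addresses would keep working after the move.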

How important is this? I worked in the hosting industry and there was a nightmare scenario that I was more than happy to avoid. Hosting customers pay a lot of money for near 100% uptime. They have no interest in, and often don’t understand, the intricacies of the infrastructure. They pay not to care about it. The host hardware, servers and network, had 3 years of support from the manufacturer. After that, replacement parts would be hard to find and would be expensive. Eventually we would have to migrate to a new network and servers. How do you tell customers, who have applications sometimes written by the worst of developers, that they could have some downtime and that there is a risk that their application would break because of a change of IP? I can tell you the response: they see this as being caused by the hosting company, and any work the customers need to pay for to repair the issues will be paid for by the hosting company. And there’s the issue. IP address virtualisation with expanded Live Migration takes care of that issue.

For you public or private cloud operators, you are getting metrics that record the infrastructure utilisation of individual virtual machines. Those metrics will travel with the virtual machine. I guess they are stored in a file or files, and that is another thing you’ll need to plan (and bill) for when it comes to storage and storage sizing (it’ll probably be a tiny space consumer). These metrics can be extracted by a third party tool so you can analyse them and cross charge (internal or external) customers.
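As a rough idea of what a cross-charging tool might do with those metrics, here is a minimal sketch. The metric names, the usage figures, and the rates are all hypothetical; I don't yet know what the real metrics are called or how they are exposed.

```python
from decimal import Decimal

# Hypothetical price list: cost per unit of each metered resource.
RATES = {
    "cpu_hours": Decimal("0.02"),
    "memory_gb_hours": Decimal("0.01"),
    "network_gb": Decimal("0.05"),
}


def monthly_charge(usage):
    """Multiply each metered quantity by its rate and total the result."""
    return sum(
        (Decimal(str(usage[metric])) * rate for metric, rate in RATES.items()),
        Decimal("0"),
    )


# Made-up metrics for one VM over a month.
usage = {"cpu_hours": 300, "memory_gb_hours": 2880, "network_gb": 40}

print(monthly_charge(usage))  # 36.80
```

`Decimal` is used instead of floats so that currency totals don't pick up rounding noise.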

We know that the majority of Hyper-V installations are smaller, with the average cluster size being 4.78 hosts. In my experience, many of these have a Dell EqualLogic or HP MSA array. Yes, these are the low end of hardware SANs. But they are a huge investment for customers. Some decide to go with software iSCSI solutions, which also add cost. Now it appears that those lower end clusters can use file shares to store virtual machines with support from Microsoft. NIC teaming with RDMA gives massive data transport capabilities and gives us a serious budget solution for VM storage. The days of the SAN aren’t over: they still offer functionality that we can’t get from file shares.

I’ve got more cloud and Hyper-V sessions to attend today, including a design one to kick off the morning. More to come!

Great work Aidan! Watched the webcast with the migration over a 1gig nic from local storage to a file share…pretty impressive. Looking forward to more news! VCP.. soon to be MCITP-Hyper-V!