Let’s pull a Doctor Who and travel back in time to 2003. Odds are when you bought a server, and you were taking the usual precautions on uptime/reliability, you specified that the server should have dual power supplies. The benefit of this is that a PSU could fail (it used to be #3 in my failure charts) but the redundant PSU would keep things running along.

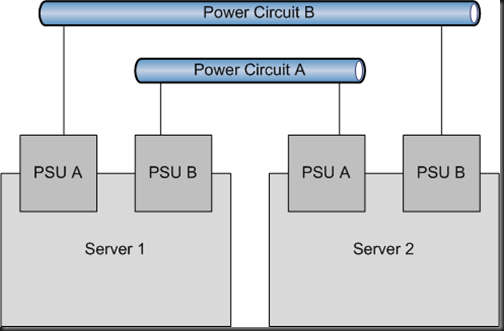

Furthermore, an electrician would provide two independent power circuits to the server racks. PSU A in each server would go into power circuit A, and PSU B in each server would go into power circuit B. The benefit of this is that a single power circuit could be brought down/fail but every server would stay running, because the redundant PSU would be powered by the alternative power circuit.

Applying this design now is still the norm, and is probably what you plan when designing a private cloud compute cluster. If power circuit A goes down, there is no downtime for VMs on either host. They keep on chugging away.

Nothing is free in the computer room/data centre. In fact, everything behind those secured doors costs much more than out on the office floors. Electrician skills, power distribution networks, PSUs for servers, the electricity itself (thanks to things like the UPS), not to mention the air conditioning that’s required to keep the place cool. My experience in the server hosting industry taught me that the cost of biggest concern was electricity. Every decision we made had to consider electricity consumption.

It’s not a secret that data centres are doing everything that they can to eliminate costs. Companies in the public cloud (hosting) industry are trimming costs because they are in a cutthroat business where the sticker price is often the biggest decision-making factor for customers when they choose a service provider. We’ve heard of data centres running at 30C instead of the traditional 18-21C … I won’t miss having to wear a coat when physically touching servers in the middle of summer. Some are locating their data centres in cool-moderate countries (Ireland & Iceland come to mind) because they can use native air without having to cool it (and avoid the associated electrical costs). There are now data centres that take the hot air from the “hot aisle” and use that to heat offices or water for the staff in the building. Some are building their own power supplies, e.g. solar panel farms in California or wind turbines in Sweden. It doesn’t have to stop there; you can do things at the micro level.

You can choose equipment that consumes less power. Browsing around on the HP website quickly finds you various options for memory boards. Some consume less electricity. You can be selective about networking appliances. I remember buying a slightly higher spec model of switch than we needed because it consumed 40% less electricity than a lesser model. And get this: some companies are deliberately (after much planning) choosing lower capacity processors based on a couple of factors.

- They know that they can get away with providing less CPU muscle.

- They are deliberately choosing to put fewer VMs on a host than is possible because their “sweet spot” cost calculations took CPU power consumption and heat generation costs into account.

- Having more medium capacity hosts works out cheaper for them than having fewer larger hosts over X years, because of the lower power costs (taking everything else into account).

Let’s bring it back to our computer room/data centre where we’re building a private cloud. What do we do? Do we do “the usual” and build our virtualisation hosts just like we have always built servers: each host gets dual PSUs on independent power circuits, just as above? Or do we think about the real costs of servers? I’ve previously mentioned that the true cost of a server is not the purchase cost. It’s much more than that, including purchase cost, software licensing, and electricity. A safe rule of thumb is that if a server costs €2,000 then it’s going to cost at least €2,000 to power it over its 3-year lifetime.
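That rule of thumb is easy to sanity-check for your own kit. Here is a minimal sketch, assuming you know a server's average power draw and your electricity price. The function names, the wattage, the price per kWh, and the `overhead_factor` (a hypothetical multiplier for cooling and UPS overhead) are all placeholders of mine, not measured figures.

```python
HOURS_PER_YEAR = 24 * 365  # ignores leap years


def electricity_cost(avg_watts, price_per_kwh, years=3, overhead_factor=1.0):
    """Estimate the cost of powering one server over its lifetime.

    overhead_factor is an assumed multiplier for cooling/UPS overhead;
    1.0 means "count only the server's own draw".
    """
    kwh = avg_watts / 1000 * HOURS_PER_YEAR * years
    return kwh * overhead_factor * price_per_kwh


def true_server_cost(purchase, licensing, power):
    """Purchase cost plus software licensing plus electricity."""
    return purchase + licensing + power


# Placeholder inputs: swap in your own numbers.
purchase_price = 2000
power = electricity_cost(avg_watts=300, price_per_kwh=0.15, years=3,
                         overhead_factor=2.0)
print(f"Electricity over 3 years: {power:.2f}")
print(f"Power cost at least matches purchase price: {power >= purchase_price}")
```

If the second line prints `False` for your numbers, the rule of thumb is pessimistic for your environment, and the case for trimming PSUs gets weaker.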

So this is when some companies compare the cost of running fully specced and internally redundant (PSUs etc) servers versus the risk of having brief windows of downtime. Taking this into account, they’ll approach building clusters in alternative ways.

In the first diagram (above) we had a 2 node Hyper-V cluster, with the usual server build including 2 PSUs. Now we’re simplifying the hosts. They’ve each got one PSU. To provide power circuit fault tolerance, we’ve doubled the number of hosts. In theory, this should reduce our power requirements and costs. It does double the rack space, license, and server purchase costs, but for some companies, this is negated by reduced power costs; the magic is in the assessment.

But we need more hosts. We can’t do an N+1 cluster. This is because half of the hosts are on power circuit A. If that circuit goes down then we lose half of the cluster. Maybe we need an N+N cluster? In other words if we have 2 active hosts, then we have 2 passive hosts. Or maybe we extend this out again, to N+N+N with power circuits A, B, and C. That way we would lose 1/3 of a cluster if the power goes.
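The effect of losing a circuit is simple arithmetic, and a short sketch makes it easy to try different layouts. It assumes single-PSU hosts spread evenly across the circuits; the function and its parameter names are mine, purely for illustration.

```python
from fractions import Fraction


def circuit_failure_impact(hosts_per_circuit, circuits, hosts_needed_for_load):
    """Describe what is left of a cluster after ONE power circuit fails.

    Assumes every host has a single PSU and hosts are spread evenly
    across the circuits.
    """
    total = hosts_per_circuit * circuits
    surviving = total - hosts_per_circuit
    return {
        "total_hosts": total,
        "fraction_lost": Fraction(hosts_per_circuit, total),
        "surviving_hosts": surviving,
        "load_still_fits": surviving >= hosts_needed_for_load,
    }


# N+N with 2 active hosts on circuits A and B
print(circuit_failure_impact(hosts_per_circuit=2, circuits=2,
                             hosts_needed_for_load=2))

# N+N+N with circuits A, B, and C
print(circuit_failure_impact(hosts_per_circuit=2, circuits=3,
                             hosts_needed_for_load=2))
```

Note that this only counts hosts. It says nothing about whether the surviving hosts have the CPU and memory to take the failed-over VMs, which you would need to size separately.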

Increasing the number of hosts to give us power fault tolerance gives us the opportunity to spread the virtual machine loads. That in turn means you need less CPU and memory in each host, which reduces the total power requirements of those hosts and the cost impact of buying more server chassis.

The downside of this approach is that if you lose power to PSU A in Server 1, the VMs will stop executing, and fail over to Servers 3 or 4.

I’m not saying this is the right way for everyone. It’s just an option to consider, and run through Excel with all the costs to hand. You will have to consider that there will be a brief amount of downtime for VMs (they will fail over and boot up on another host) if you lose a power circuit. That wouldn’t happen if each host has 2 PSUs, each on different power circuits. But maybe the reduced cost (if it is really there) would be worth the risk of a few minutes of downtime?
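If you would rather script that comparison than build a spreadsheet, here is a rough sketch of the same exercise. Everything in it is an assumption for illustration: the `Design` fields, the `expected_downtime_cost` model (outages per year times minutes times a cost per minute), and every number in the example. Plug in your own quotes and bills before drawing any conclusion.

```python
from dataclasses import dataclass


@dataclass
class Design:
    """One way of building the cluster; all costs cover the whole period."""
    name: str
    hosts: int
    purchase_per_host: float
    license_per_host: float
    rack_cost_per_host: float
    power_cost_per_host: float

    def total(self):
        per_host = (self.purchase_per_host + self.license_per_host
                    + self.rack_cost_per_host + self.power_cost_per_host)
        return self.hosts * per_host


def expected_downtime_cost(outages_per_year, minutes_per_outage,
                           cost_per_minute, years=3):
    """Very crude price tag for the failover downtime risk (assumed model)."""
    return outages_per_year * minutes_per_outage * cost_per_minute * years


# Placeholder figures only.
dual_psu = Design("2 hosts, dual PSU", hosts=2, purchase_per_host=4000,
                  license_per_host=1500, rack_cost_per_host=300,
                  power_cost_per_host=4000)
single_psu = Design("4 hosts, single PSU", hosts=4, purchase_per_host=2000,
                    license_per_host=1500, rack_cost_per_host=300,
                    power_cost_per_host=1800)

risk = expected_downtime_cost(outages_per_year=0.5, minutes_per_outage=5,
                              cost_per_minute=50)

print(f"{dual_psu.name}: {dual_psu.total():.2f}")
print(f"{single_psu.name}: {single_psu.total() + risk:.2f} (including downtime risk)")
```

The single-PSU design only wins if its total, with the downtime risk added, comes in under the dual-PSU total. With different placeholder figures the answer flips, which is the whole point of doing the assessment.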